Imagine describing an image in plain English—“a cozy reading room inside a tree, warm lighting, digital art style”—and instantly getting a visual that matches your imagination. No design tools, no sketching, no photo editing. Just words turning into images. That’s the problem DALL·E solves.

DALL·E is an AI image generation system created by OpenAI that converts text descriptions into original images. It allows users to generate illustrations, concept art, realistic scenes, and creative visuals simply by describing what they want to see.

What makes DALL·E especially powerful is its ability to understand both language and visual concepts at the same time. It doesn’t just create random images—it interprets context, style, composition, and relationships between objects in a surprisingly human-like way.

By the end of this guide, you’ll understand what DALL·E is, how it works step by step, its business model, key features, the technology behind it, and why many entrepreneurs aim to build DALL·E-like image generation platforms—and how Miracuves can help make that possible.

What Is DALL·E? The Simple Explanation

DALL·E is an AI image generation system that creates images from text descriptions. In simple terms, you type what you want to see, and DALL·E generates a brand-new image that matches your description—whether it’s realistic, artistic, or completely imaginative.

The Core Problem DALL·E Solves

Creating visuals usually requires design skills, time, and specialized software. DALL·E removes those barriers by:

- Turning ideas into images instantly

- Eliminating the need for drawing or photo editing skills

- Making visual creation accessible to non-designers

- Speeding up creative experimentation

It lets people focus on ideas and storytelling, not tools.

Target Users and Use Cases

DALL·E is commonly used by:

- Designers and creative teams for concept exploration

- Marketers creating campaign visuals

- Writers visualizing scenes and characters

- Educators creating illustrative content

- Product teams prototyping ideas quickly

- Individuals experimenting with AI art

Current Market Position

DALL·E is positioned as a mainstream, easy-to-use AI image generator. It’s known for strong language understanding, reliable outputs, and tight integration with OpenAI’s other products, including ChatGPT and the OpenAI API. That combination makes it accessible to beginners while still useful for professionals.

Why It Became Successful

DALL·E became popular because it made AI image generation simple and intuitive. Users didn’t need to learn complex parameters—clear natural language was enough to get impressive results.

How DALL·E Works — Step-by-Step Breakdown

For Users (Creators, Marketers, Designers)

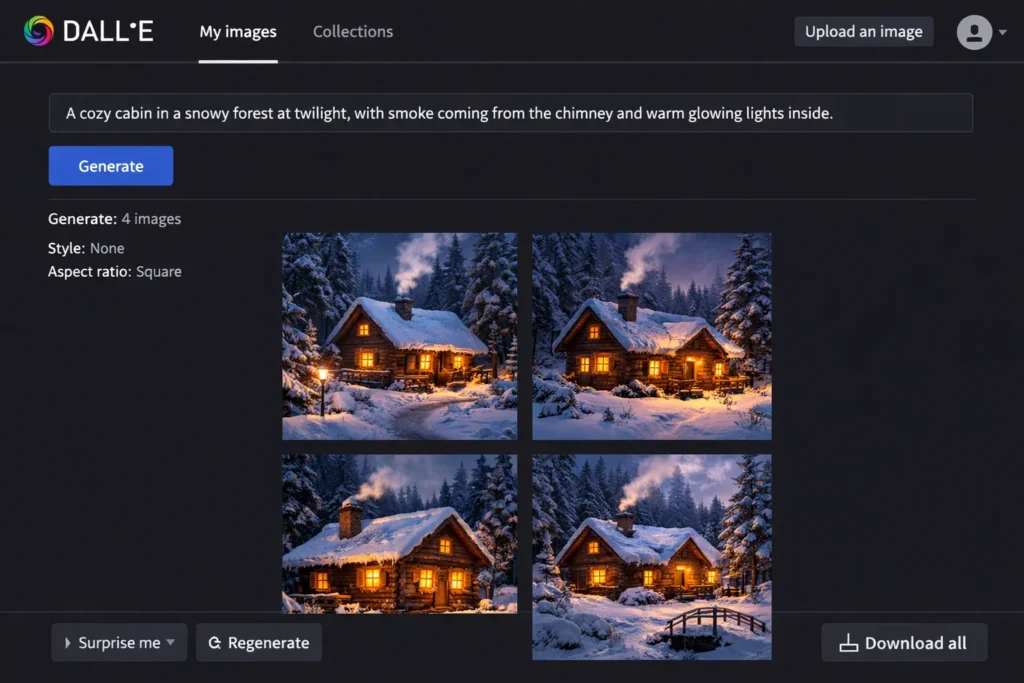

Getting started

Users interact with DALL·E through a simple interface where they type a text description of the image they want. There’s no need to adjust technical settings—natural language is enough to begin.

Writing a prompt

A prompt usually includes:

- Subject (what should appear in the image)

- Context (environment, action, relationships)

- Style (photorealistic, illustration, watercolor, 3D, etc.)

- Mood or details (lighting, colors, perspective)

Clear prompts lead to more accurate and visually appealing results.
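The four prompt parts above can be treated as structured fields and joined into a single prompt string. This is a minimal sketch; the `PromptSpec` class and its field names are hypothetical and not part of any DALL·E interface, which simply accepts free text.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical structure mirroring the subject / context / style / mood parts of a prompt."""
    subject: str
    context: str = ""
    style: str = ""
    mood: str = ""

    def to_prompt(self) -> str:
        # Keep only the parts that were filled in, in subject -> context -> style -> mood order.
        parts = [self.subject, self.context, self.style, self.mood]
        return ", ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    subject="a cozy reading room inside a tree",
    context="shelves carved into the trunk",
    style="digital art",
    mood="warm lighting",
)
print(spec.to_prompt())
# a cozy reading room inside a tree, shelves carved into the trunk, digital art, warm lighting
```

Keeping the parts separate makes it easy to change one dimension (for example, only the style) while holding the rest of the description constant.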

Image generation

Once the prompt is submitted, DALL·E:

- Interprets the text and visual intent

- Understands how objects relate to each other

- Generates one or more original images from scratch

- Delivers results within seconds

Each image is newly created, not copied from existing pictures.
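For developers, a generation request to OpenAI’s Images API is a small JSON body. The sketch below only builds and validates that body without sending anything; the size lists and the one-image limit for DALL·E 3 reflect OpenAI’s Images API documentation at the time of writing and should be checked against the current docs. The helper name `build_generation_request` is hypothetical.

```python
import json

# Sizes documented for each DALL·E model in OpenAI's Images API (verify against current docs).
ALLOWED_SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}


def build_generation_request(prompt: str, model: str = "dall-e-3",
                             n: int = 1, size: str = "1024x1024") -> dict:
    """Build (but do not send) a JSON body for POST /v1/images/generations."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if model not in ALLOWED_SIZES:
        raise ValueError(f"unknown model: {model}")
    if size not in ALLOWED_SIZES[model]:
        raise ValueError(f"size {size} not supported by {model}")
    # DALL·E 3 returns one image per request; DALL·E 2 allows up to 10.
    max_n = 1 if model == "dall-e-3" else 10
    if not 1 <= n <= max_n:
        raise ValueError(f"n must be between 1 and {max_n} for {model}")
    return {"model": model, "prompt": prompt, "n": n, "size": size}


body = build_generation_request("a cat sitting on a stack of books in a quiet library")
print(json.dumps(body))
```

To actually send it, you would POST this body to `https://api.openai.com/v1/images/generations` with an `Authorization: Bearer` header, or pass the same fields to `client.images.generate(...)` in OpenAI’s official Python SDK.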

Refining and iterating

Users can improve results by:

- Rewriting or adding detail to the prompt

- Asking for variations of an image

- Adjusting style or composition through language

- Regenerating until the desired outcome is achieved

This iterative loop makes DALL·E feel like a creative partner.

Typical user journey

User writes prompt → AI generates images → user reviews results → refines prompt → final image is produced.
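The journey above is a loop that stops once the user accepts an image. Here is a minimal sketch of that loop, assuming three hypothetical callbacks (`generate`, `review`, `refine`) that stand in for the image model, the user’s judgment, and the user’s prompt edits.

```python
from typing import Callable, Optional


def iterate_until_accepted(
    prompt: str,
    generate: Callable[[str], list[str]],
    review: Callable[[list[str]], Optional[str]],
    refine: Callable[[str], str],
    max_rounds: int = 5,
) -> Optional[str]:
    """Run prompt -> generate -> review -> refine until an image is accepted or rounds run out."""
    for _ in range(max_rounds):
        images = generate(prompt)
        chosen = review(images)
        if chosen is not None:
            return chosen          # final image produced
        prompt = refine(prompt)    # user adds detail and tries again
    return None                    # gave up after max_rounds


# Toy stand-ins: "images" are just labels, and the reviewer accepts
# anything generated from a prompt that mentions lighting.
def fake_generate(prompt: str) -> list[str]:
    return [f"img<{prompt}>#{i}" for i in range(2)]


def fake_review(images: list[str]) -> Optional[str]:
    return images[0] if "lighting" in images[0] else None


def fake_refine(prompt: str) -> str:
    return prompt + ", warm lighting"


result = iterate_until_accepted("a reading room inside a tree",
                                fake_generate, fake_review, fake_refine)
print(result)
# img<a reading room inside a tree, warm lighting>#0
```

The `max_rounds` cap is a design choice for paid platforms: it bounds how many generations one automated loop can consume.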

For Advanced Use Cases

Image editing and variations

DALL·E supports editing existing images (often called inpainting): you mark the area to change, describe the replacement in text, and the model regenerates only that region. This lets users refine a visual without starting over.
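In OpenAI’s edits endpoint for DALL·E 2, the region to change is marked with a mask image whose fully transparent pixels (alpha 0) show where to edit. The sketch below builds only the alpha channel as plain Python lists for a rectangular region; turning it into the required PNG file would need an imaging library such as Pillow, which is left out here. The function name is hypothetical.

```python
OPAQUE, TRANSPARENT = 255, 0


def rectangular_edit_mask(width: int, height: int,
                          left: int, top: int, right: int, bottom: int) -> list[list[int]]:
    """Return an alpha channel (rows of 0-255 values) for an inpainting mask.

    Pixels inside [left, right) x [top, bottom) are fully transparent, marking
    the area to regenerate; everything else stays opaque and is preserved.
    """
    if not (0 <= left < right <= width and 0 <= top < bottom <= height):
        raise ValueError("edit region must lie inside the image")
    return [
        [TRANSPARENT if left <= x < right and top <= y < bottom else OPAQUE
         for x in range(width)]
        for y in range(height)
    ]


mask = rectangular_edit_mask(4, 3, left=1, top=0, right=3, bottom=2)
for row in mask:
    print(row)
# [255, 0, 0, 255]
# [255, 0, 0, 255]
# [255, 255, 255, 255]
```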

Consistency across outputs

With careful prompting, users can guide DALL·E toward consistent styles or themes across multiple images—useful for branding or storytelling.
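One common prompting habit for consistency is to reuse the exact same style description in every prompt of a series. A minimal sketch, where `BRAND_STYLE` is a hypothetical house style rather than anything DALL·E defines:

```python
# Hypothetical "house style" reused verbatim across every prompt in a series.
BRAND_STYLE = "flat vector illustration, pastel palette, soft shadows, centered composition"


def branded_prompts(subjects: list[str], style: str = BRAND_STYLE) -> list[str]:
    """Attach the same style description to each subject so a series stays visually consistent."""
    return [f"{subject.strip()}, {style}" for subject in subjects]


series = branded_prompts(["a fox reading a map", "a fox setting up a tent"])
for p in series:
    print(p)
```

Identical wording helps but does not guarantee identical results, so teams usually still review a series side by side.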

Technical Overview (Simple)

DALL·E combines:

- Language understanding (to read prompts)

- Visual concept learning (to understand objects and scenes)

- Image generation models (to create pixels from ideas)

This allows it to translate words into coherent visuals with strong alignment to user intent.


Key Features That Make DALL·E Successful

Natural language understanding

DALL·E is especially good at understanding plain English descriptions. Users don’t need technical commands—simple, descriptive language is enough to guide image creation accurately.

Strong alignment between text and visuals

The system understands relationships between objects, actions, and environments. This means prompts like “a cat sitting on a stack of books in a quiet library” produce images where elements make sense together, not random combinations.

Wide range of visual styles

DALL·E can generate images in many styles, including:

- Photorealistic scenes

- Digital illustrations

- Hand-drawn or painted looks

- Minimalist or abstract visuals

- Cartoon and stylized art

This versatility makes it useful across many creative needs.

Image variation and iteration

Users can generate multiple variations from a single idea. This supports creative exploration and helps users refine concepts without starting over.

Image editing with text instructions

DALL·E allows users to modify parts of an image using text guidance—such as changing an object, adjusting style, or altering details—while keeping the rest of the image intact.

Beginner-friendly experience

One of DALL·E’s strengths is accessibility. New users can get strong results without learning complex parameters, which lowers the barrier to entry.

Reliable and consistent outputs

Compared to early image generators, DALL·E tends to produce more consistent, usable images, making it practical for real-world creative workflows.

Broad creative use cases

DALL·E is used for:

- Marketing visuals and ads

- Concept art and mood boards

- Educational illustrations

- Product mockups

- Social media content

- Story and character visualization

Integration into AI workflows

DALL·E fits naturally into broader AI workflows, often combined with text generation, brainstorming, and editing—making it part of a larger creative pipeline.

Focus on safety and responsible use

Built-in safeguards help reduce harmful or misleading outputs, which is important for businesses and public-facing content.

The Technology Behind DALL·E

Tech stack overview (simplified)

DALL·E is built on generative AI for images, which means it can create brand-new visuals from scratch based on what you describe in words. At a high level, it combines:

- A language understanding layer (to interpret your prompt)

- An image generation model (to produce the picture)

- Optional tools for edits and variations (to refine existing images)

- Safety systems that block or reduce harmful requests

OpenAI has iterated across versions (DALL·E, DALL·E 2, DALL·E 3) to improve image quality, prompt understanding, and safety controls.
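The layers listed above can be pictured as a simple pipeline: a safety check, a language step, and an image step. The sketch below uses placeholder functions (`is_allowed`, `interpret`, `render` are all hypothetical) that return fake image identifiers; it shows how the stages connect, not how DALL·E implements them.

```python
from dataclasses import dataclass, field


@dataclass
class GenerationResult:
    prompt: str
    images: list[str] = field(default_factory=list)
    blocked: bool = False
    reason: str = ""


def is_allowed(prompt: str) -> bool:
    # Placeholder safety layer: a real system would call a trained moderation classifier.
    return "forbidden" not in prompt.lower()


def interpret(prompt: str) -> str:
    # Placeholder language layer: here it only normalizes whitespace.
    return " ".join(prompt.split())


def render(prompt: str, n: int) -> list[str]:
    # Placeholder image model: returns fake image identifiers instead of pixels.
    return [f"image-{i}:{prompt}" for i in range(n)]


def run_pipeline(prompt: str, n: int = 1) -> GenerationResult:
    if not is_allowed(prompt):
        return GenerationResult(prompt, blocked=True, reason="prompt failed safety check")
    cleaned = interpret(prompt)
    return GenerationResult(cleaned, images=render(cleaned, n))


result = run_pipeline("  a lighthouse   at dusk  ", n=2)
print(result)
```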

How text becomes an image

In simple terms, DALL·E learns patterns connecting words to visual concepts (like “golden hour lighting,” “wide-angle street photo,” or “watercolor illustration”). When you submit a prompt, it uses those learned patterns to generate an image that matches the description, including objects, style, and composition.

OpenAI describes the original DALL·E (2021) as a 12-billion-parameter transformer that models text and image tokens as a single stream of data, which lets the model connect language to visual structure.
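To make "a single stream of tokens" concrete: the original DALL·E paper reports up to 256 text tokens (from a 16,384-token BPE vocabulary) followed by 1,024 image tokens, a 32 x 32 grid drawn from an 8,192-entry codebook. The toy sketch below builds such a sequence from integer ids. Shifting image ids past the text vocabulary and the dedicated padding id are illustrative choices, not necessarily how DALL·E implemented it.

```python
TEXT_VOCAB = 16_384     # BPE text vocabulary size reported for the original DALL·E
IMAGE_VOCAB = 8_192     # image codebook size reported for the original DALL·E
MAX_TEXT_TOKENS = 256
IMAGE_GRID = 32         # 32 x 32 = 1024 image tokens per image

# Illustrative choice: a padding id that collides with neither vocabulary.
PAD_ID = TEXT_VOCAB + IMAGE_VOCAB


def build_sequence(text_ids: list[int], image_ids: list[int]) -> list[int]:
    """Concatenate text and image tokens into one stream for a single-stream transformer.

    Image ids are shifted past the text vocabulary so both share one id space.
    """
    if len(text_ids) > MAX_TEXT_TOKENS:
        raise ValueError("too many text tokens")
    if len(image_ids) != IMAGE_GRID * IMAGE_GRID:
        raise ValueError("expected a full 32x32 grid of image tokens")
    if any(not 0 <= t < TEXT_VOCAB for t in text_ids):
        raise ValueError("text id out of range")
    if any(not 0 <= t < IMAGE_VOCAB for t in image_ids):
        raise ValueError("image id out of range")
    padded_text = text_ids + [PAD_ID] * (MAX_TEXT_TOKENS - len(text_ids))
    shifted_image = [TEXT_VOCAB + t for t in image_ids]
    return padded_text + shifted_image


seq = build_sequence([17, 402, 9], [5] * 1024)
print(len(seq))  # 1280 = 256 text positions + 1024 image positions
```

During training, the transformer learns to predict each next token in this stream; at generation time it receives only the text part and produces the image tokens, which a separate decoder turns back into pixels.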

Generations, edits, and variations

DALL·E is often used through an “image generation” workflow with three common capabilities:

- Generations: Create an image from a text prompt

- Edits: Modify an existing image using a new prompt (change part of it or restyle it)

- Variations: Produce alternate versions of an existing image (in OpenAI’s API, variations, like edits, have been offered for DALL·E 2 rather than DALL·E 3)

This is why DALL·E feels practical for real projects: you don’t just generate once—you iterate.
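On the developer side, the three capabilities map to three Images API endpoints with different required inputs. The routing sketch below assumes OpenAI’s documented paths and the DALL·E 2-only support for edits and variations; the `Operation` table and `route` function are hypothetical helpers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Operation:
    path: str
    needs_prompt: bool
    needs_image: bool
    models: frozenset[str]


# Based on OpenAI's Images API as documented for DALL·E; verify against current docs.
OPERATIONS = {
    "generation": Operation("/v1/images/generations", True, False, frozenset({"dall-e-2", "dall-e-3"})),
    "edit": Operation("/v1/images/edits", True, True, frozenset({"dall-e-2"})),
    "variation": Operation("/v1/images/variations", False, True, frozenset({"dall-e-2"})),
}


def route(kind: str, model: str, prompt: Optional[str] = None,
          image: Optional[bytes] = None) -> str:
    """Validate inputs for an operation and return the endpoint path to call."""
    op = OPERATIONS[kind]  # raises KeyError for an unknown operation
    if model not in op.models:
        raise ValueError(f"{model} does not support {kind}")
    if op.needs_prompt and not prompt:
        raise ValueError(f"{kind} requires a prompt")
    if op.needs_image and image is None:
        raise ValueError(f"{kind} requires an input image")
    return op.path


print(route("generation", "dall-e-3", prompt="a watercolor lighthouse"))
```

Note that edits and variations upload image files, so in practice they are sent as multipart form data rather than a JSON body.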

Why DALL·E 3 feels more “prompt-smart”

DALL·E 3 is designed to understand more nuance and detail in prompts than earlier versions, which helps it follow complex instructions more accurately.

Also, when you use the DALL·E 3 API, your prompt may be automatically rewritten into a more detailed instruction to improve results, and the rewritten text is returned alongside each image in a `revised_prompt` field.
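Because the rewritten prompt comes back with each image, an application can record what was actually rendered. The sketch below parses a hypothetical response body shaped like a DALL·E 3 Images API response; the URL and text are made up for illustration.

```python
import json

# Hypothetical response body, shaped like an Images API response for dall-e-3.
sample_response = json.loads("""
{
  "created": 1700000000,
  "data": [
    {
      "url": "https://example.com/generated.png",
      "revised_prompt": "A cozy reading room built inside a hollow tree trunk, warm lamplight, digital art."
    }
  ]
}
""")


def prompts_used(response: dict, original_prompt: str) -> list[tuple[str, str]]:
    """Pair the user's prompt with the prompt actually rendered for each image.

    Falls back to the original prompt when no revised_prompt is present
    (for example, with models that do not rewrite prompts).
    """
    return [(original_prompt, item.get("revised_prompt", original_prompt))
            for item in response.get("data", [])]


pairs = prompts_used(sample_response, "reading room inside a tree, digital art")
for original, revised in pairs:
    print("asked for:", original)
    print("rendered: ", revised)
```

Storing both versions makes it easier to explain surprising outputs and to reuse a prompt that worked well.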

Safety, moderation, and bias controls

Because image generation can be misused, DALL·E includes safeguards. For example, OpenAI describes mitigations for DALL·E 3 that can decline certain requests (like public figures by name) and broader safety work across risk areas.
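A rule like "decline requests that name a public figure" can be illustrated with a simple pre-generation check. This is a toy sketch: `PROTECTED_NAMES` is a hypothetical list with made-up names, and a production system would rely on trained classifiers and maintained policy data rather than string matching.

```python
import re

# Hypothetical deny-list with made-up names, for illustration only.
PROTECTED_NAMES = ("Jane Example", "John Sample")


def check_prompt(prompt: str, names: tuple[str, ...] = PROTECTED_NAMES) -> tuple[bool, list[str]]:
    """Return (allowed, matched_names) using case-insensitive whole-word matching."""
    matched = [name for name in names
               if re.search(rf"\b{re.escape(name)}\b", prompt, flags=re.IGNORECASE)]
    return (not matched, matched)


print(check_prompt("portrait of jane example at a desk"))
print(check_prompt("a lighthouse at dusk"))
```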

Why this tech matters for business

DALL·E’s real business value is speed and iteration: teams can go from idea → visual → refinement in minutes. That makes it useful for:

- Campaign concepts and ad creatives

- Product mockups and brand exploration

- Storyboarding and concept art

- Educational and editorial visuals

DALL·E’s Impact & Market Opportunity

Industry impact

DALL·E helped bring text-to-image generation into the mainstream. By making image creation as simple as writing a sentence, it changed how non-designers, marketers, educators, and product teams approach visuals. What once required design software and skills now starts with language.

It also shifted creative workflows. Instead of spending days on early concepts, teams can generate dozens of visual directions in minutes, then refine the strongest ideas. This made AI imagery a front-end ideation tool, not just a novelty.

Market demand and growth drivers

Demand for DALL·E-style image generation is driven by:

- Explosion of visual content across social, ads, and web

- Faster content cycles in marketing and media

- Rising costs and time constraints in traditional design

- Growth of no-code and low-code creative tools

- Increasing comfort with AI-assisted creativity

As visuals become central to communication, text-to-image tools move from optional to essential.

User segments and behavior

DALL·E is widely used by:

- Marketing teams creating campaign visuals

- Product teams prototyping ideas

- Educators and publishers generating illustrations

- Creators and influencers producing content

- Individuals exploring AI-assisted art

A common behavior pattern is rapid iteration. Users generate, tweak, regenerate, and refine multiple times before settling on a final image.

Business and enterprise adoption

Businesses value DALL·E because it:

- Reduces dependency on external design for early stages

- Speeds up brainstorming and concept validation

- Lowers cost of experimentation

- Integrates well into broader AI workflows

It’s often used alongside writing and planning tools as part of a single creative pipeline.

Future direction

Text-to-image platforms like DALL·E are evolving toward:

- Better consistency across multiple images

- Stronger style and brand control

- Higher reliability for commercial use

- Deeper integration into design and marketing tools

- Multimodal workflows combining text, image, and layout

Opportunities for entrepreneurs

There are strong opportunities to build DALL·E-inspired platforms for:

- Brand-safe image generation for businesses

- Industry-specific visual tools (real estate, e-commerce, education)

- Marketing and ad creative automation

- Product mockup and visualization tools

- Embedded image generation inside SaaS products

DALL·E’s success is why many entrepreneurs explore building image-generation platforms: language-driven creativity has opened an entirely new market layer.

Building Your Own DALL·E-Like Platform

Why businesses want DALL·E-style image platforms

DALL·E shows that language-driven creativity scales extremely well. Businesses are drawn to this model because:

- Visual content is required everywhere (marketing, ads, product, social)

- Text input lowers the learning curve for users

- Faster ideation leads to quicker decision-making

- AI reduces early-stage design costs

- Usage-based models scale naturally with demand

Instead of replacing designers, these platforms accelerate creative workflows.

Key considerations before development

If you plan to build a DALL·E-like platform, you should define:

- Target users (creators, marketers, SMBs, enterprises)

- Image style focus (realistic, artistic, branded, niche-specific)

- Prompt simplicity vs advanced controls

- Image editing and variation capabilities

- Safety, moderation, and content rules

- Licensing and commercial usage policies

- API vs end-user application strategy

Clear positioning avoids competing purely on “generic image generation.”


Cost Factors & Pricing Breakdown

DALL·E-Like App Development: Market Price

| Development Level | Inclusions | Estimated Market Price (USD) |
|---|---|---|
| 1. Basic AI Image Generation MVP | Core web interface for text-to-image prompts, user registration & login, integration with a single image-generation model/API, prompt → image generation flow, basic image gallery & downloads, simple rate limiting, minimal safety filters, standard admin panel, basic usage analytics | $90,000 |
| 2. Mid-Level AI Image Creation Platform | Advanced prompt controls (styles, sizes, variations), image editing (regenerate, inpaint/outpaint basics), user workspaces, collections & favorites, queue management, credits/usage tracking, stronger safety & moderation hooks, analytics dashboard, polished web UI and mobile-ready experience | $180,000 |
| 3. Advanced DALL·E-Level Generative Image Ecosystem | Large-scale multi-tenant image generation platform with high-concurrency pipelines, advanced editing tools, prompt versioning, model routing, credits/subscription billing, enterprise orgs & RBAC, detailed observability, robust moderation & policy enforcement, cloud-native scalable architecture | $280,000+ |

DALL·E-Style AI Image Generation Platform Development

The prices above reflect the global market cost of developing a DALL·E-like AI image generation platform — typically ranging from $90,000 to over $280,000, with a delivery timeline of around 4–12 months for a full, from-scratch build. This usually includes model integration, prompt processing, image pipelines, safety and policy enforcement, usage metering, analytics, and production-grade infrastructure capable of handling high user demand.

Miracuves Pricing for a DALL·E-Like Custom Platform

Miracuves Price: Starts at $15,999

This is positioned for a feature-rich, JS-based DALL·E-style AI image generation platform that can include:

- Text-to-image generation via your chosen AI models or APIs

- Prompt controls (styles, sizes, variations) and basic image editing workflows

- User accounts, image history, favorites, and collections

- Usage and credit tracking with optional subscription or pay-per-use billing

- Core moderation and safety hooks aligned with AI content policies

- A modern, responsive web interface plus optional companion mobile apps

From this foundation, the platform can be extended into advanced image editing, enterprise workspaces, richer moderation tooling, custom model hosting, and large-scale SaaS deployments as your AI product matures.

Note: This includes full non-encrypted source code (complete ownership), complete deployment support, backend & API setup, admin panel configuration, and assistance with publishing on the Google Play Store and Apple App Store—ensuring you receive a fully operational AI image generation ecosystem ready for launch and future expansion.
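The usage and credit tracking listed above usually comes down to a small ledger that charges credits before each generation. A minimal sketch, assuming hypothetical per-size credit prices and class names; real pricing and persistence would come from your billing configuration and database.

```python
from dataclasses import dataclass, field

# Hypothetical credit prices per image; real pricing would come from your billing config.
CREDITS_PER_IMAGE = {"1024x1024": 1, "1792x1024": 2, "1024x1792": 2}


class InsufficientCredits(Exception):
    pass


@dataclass
class CreditAccount:
    balance: int
    history: list[tuple[str, int]] = field(default_factory=list)

    def top_up(self, credits: int) -> None:
        if credits <= 0:
            raise ValueError("top-up must be positive")
        self.balance += credits
        self.history.append(("top_up", credits))

    def charge_generation(self, size: str, n: int = 1) -> int:
        """Deduct credits for n images of the given size before calling the model."""
        cost = CREDITS_PER_IMAGE[size] * n
        if cost > self.balance:
            raise InsufficientCredits(f"need {cost} credits, have {self.balance}")
        self.balance -= cost
        self.history.append(("generation", -cost))
        return cost


acct = CreditAccount(balance=5)
acct.charge_generation("1792x1024")  # costs 2
acct.top_up(10)
print(acct.balance)  # 13
```

Charging before the model call (and refunding if generation fails) is one way to avoid users consuming images they cannot pay for; the refund path is omitted here.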

Delivery Timeline for a DALL·E-Like Platform with Miracuves

For a DALL·E-style, JS-based custom build, the typical delivery timeline with Miracuves is 30–90 days, depending on:

- Depth of generation and editing features (variations, inpainting, controls, etc.)

- Number and complexity of AI model, storage/CDN, billing, and moderation integrations

- Complexity of usage limits, analytics, and governance requirements

- Scope of web portal, mobile apps, branding, and long-term scalability targets

Tech Stack

We prefer JavaScript/TypeScript for the web stack (Node.js or NestJS for the backend, Next.js for the frontend) and Flutter or React Native for mobile apps, chosen for development speed, scalability, and the benefit of sharing one codebase across platforms.

Other technology stacks can be discussed and arranged upon request when you contact our team, ensuring they align with your internal preferences, compliance needs, and infrastructure choices.

Essential features to include

A strong DALL·E-style MVP should include:

- Text-to-image prompt interface

- Multiple image outputs per prompt

- Image variations and regeneration

- Edit-by-prompt functionality

- Image history and reuse

- Usage limits and billing logic

- Fast, simple creative workflow

High-impact additions later:

- Brand-style consistency controls

- Team collaboration and asset libraries

- Commercial licensing management

- API access for product integrations

- Expansion into video or layout generation
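The "usage limits" item in the MVP list is often implemented as a per-user token bucket: each user can burst a few requests, then waits for capacity to refill. A minimal sketch, assuming an injectable clock so it can be tested deterministically; the class is a generic rate-limiting technique, not part of any DALL·E API.

```python
import time
from typing import Callable


class TokenBucket:
    """Rate limiter allowing bursts up to `capacity`, refilling at `rate` requests per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, rate=0.5, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(3)])  # [True, True, False]
fake_now[0] = 2.0                           # 2 s later: 1 token refilled
print(bucket.allow())                       # True
```

In a real service you would keep one bucket per user ID (for example in a dictionary or a shared store such as Redis) so that one user cannot exhaust capacity for everyone else.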


Conclusion

DALL·E made one thing very clear: creativity doesn’t need complex tools anymore—it needs clear ideas. By turning everyday language into visuals, it changed how people brainstorm, design, and communicate. The simplicity of typing a prompt and getting usable images is what turned AI image generation from a research concept into a practical creative tool.

For founders and product teams, DALL·E proves that platforms sitting at the intersection of language and visuals unlock massive value. When creativity becomes faster, cheaper, and more accessible, entirely new products, workflows, and businesses emerge around it.

FAQs

What is DALL·E used for?

DALL·E is used to create images from text descriptions, including illustrations, concept art, marketing visuals, product mockups, educational images, and creative artwork.

How does DALL·E make money?

OpenAI monetizes DALL·E through per-image pricing in its API for developers and through paid ChatGPT plans that include image generation.

Is DALL·E free to use?

Free access has been limited and has changed over time. Regular or large-scale use typically requires a paid plan, and API usage is billed per image.

What makes DALL·E different from other AI image generators?

DALL·E is known for strong language understanding and reliable prompt alignment, making it easier for users to get images that closely match detailed descriptions.

Do I need design skills to use DALL·E?

No. DALL·E is designed so anyone can generate images using natural language, without design or illustration experience.

Can DALL·E images be used for commercial purposes?

Commercial usage depends on the terms of the plan being used. Many businesses use DALL·E-generated images for marketing, content, and product concepts.

Can DALL·E edit existing images?

Yes, depending on the version and interface. DALL·E supports editing (modifying selected parts of an image with text instructions) and variations (alternate versions of an existing image).

How long does it take to generate an image?

Image generation usually takes a few seconds, depending on demand and usage tier.

Is DALL·E suitable for businesses and teams?

Yes. Businesses use DALL·E for rapid visual ideation, marketing assets, and creative exploration, often alongside other AI tools.

Can I build a platform like DALL·E?

Yes. DALL·E-style platforms can be built by combining text-to-image models, prompt handling, image refinement tools, and scalable infrastructure.

How can Miracuves help build a DALL·E-like platform?

Miracuves helps founders and businesses build AI image generation platforms with text-to-image engines, prompt workflows, editing tools, billing systems, and enterprise-ready infrastructure—allowing you to launch a DALL·E-style product quickly and scale with confidence.