Imagine you’ve found a great open-source AI model (for chat, vision, or audio)… but then reality hits: where do you host it, version it, share it with your team, demo it to clients, and push it into production without weeks of setup?

That “from model to real product” gap is exactly what Hugging Face solves.

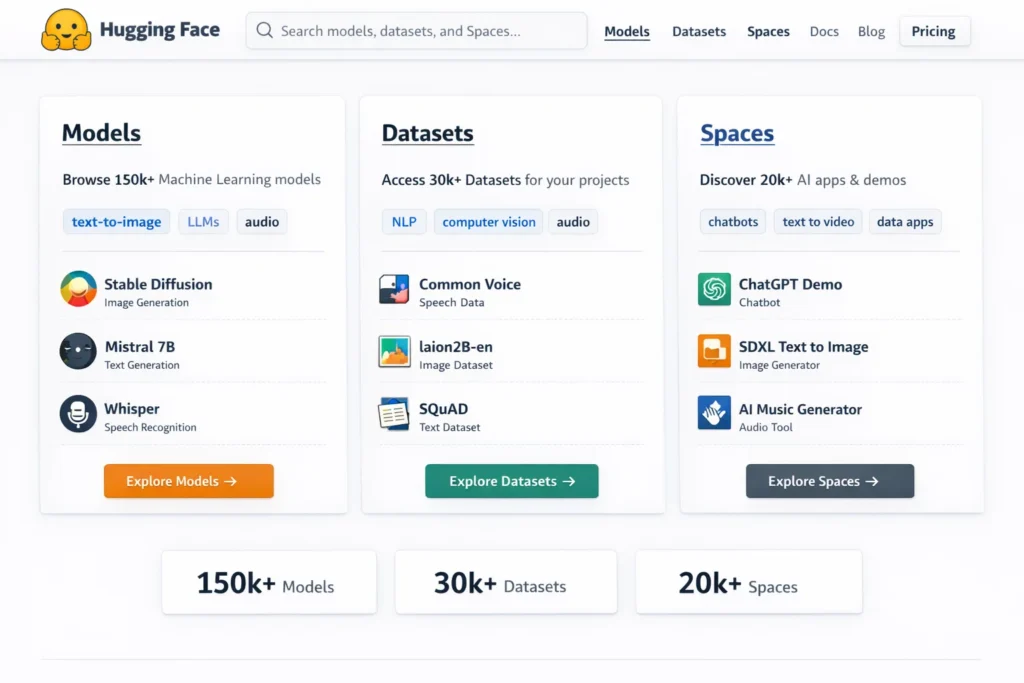

At its core, Hugging Face is a collaboration platform for the machine learning community—like a central home where people publish and discover models, datasets, and live demos (Spaces). The Hub documentation describes it as having 2M+ models, 500k datasets, and 1M demos—which hints at how massive the ecosystem has become.

Quick origin story: Hugging Face started in 2016 as a chatbot-focused startup, then pivoted toward open-source ML tools and the platform after realizing the bigger opportunity was enabling developers and companies to build with models more easily.

By the end of this guide, you’ll clearly understand what Hugging Face is, how it works step by step, how it makes money, the features that made it successful, and what it takes to build a similar platform—plus how Miracuves can help you launch a Hugging Face-like AI marketplace + deployment platform faster.

What Is Hugging Face? The Simple Explanation

Hugging Face is a platform where the AI community builds and shares machine learning assets—mainly models, datasets, and live demo apps. Think of it like a “GitHub for AI,” where you can discover a model, pull it into your project, and even deploy it—without building everything from scratch.

The core problem it solves

AI projects usually get stuck on boring-but-hard problems like:

- “Which model should we use?”

- “Where do we store and version models and datasets?”

- “How do we demo it to stakeholders?”

- “How do we deploy it reliably in production?”

Hugging Face makes those steps easier by giving you one place to:

- Find and compare models for many tasks (text, vision, audio, etc.)

- Access and manage datasets

- Host demos (so others can try your model instantly)

- Deploy models using managed services

Target users and common use cases

Who uses Hugging Face:

- Developers building AI features into apps

- ML engineers training or fine-tuning models

- Researchers publishing open models and benchmarks

- Startups and enterprises deploying AI to production

Common use cases:

- Discovering open-source LLMs and embedding models

- Using datasets for training/evaluation

- Creating a quick demo app to share with a team/client (Spaces)

- Deploying a model as an API endpoint (Inference Endpoints)

Current market position with stats

The Hub's own documentation cites 2M+ models, 500k datasets, and 1M demos, a sign of how central it has become to open ML collaboration.

Why it became successful

- It made sharing AI models feel as easy as sharing code (repos, versioning, collaboration)

- It built a “one-stop shop” for models + datasets + demos, so teams don’t have to stitch together five different tools

- It offers a clear path from experiment → demo → deployment via products like Spaces and Inference Endpoints

How Does Hugging Face Work? Step-by-Step Breakdown

For users (developers, ML engineers, product teams)

1) Create an account and pick what you want to build

Most people start on the Hugging Face Hub and choose one of three paths:

- Use an existing model

- Use a dataset

- Create a demo app (Space)

The Hub is designed as a central place to share, discover, and collaborate on ML assets.

2) Find a model (or dataset) that matches your task

You browse/search models by task (text generation, classification, image, audio, etc.), compare options, and open a model page to see:

- What it’s good at

- How to run it

- License/usage details

- Example code and files (like configs, weights, README)

This “model repo” pattern is a big reason people call it “GitHub for models.”

3) Run the model locally in your project

If you’re building an app, you’ll usually pull the model into your code using Hugging Face libraries (for example, Transformers). These libraries provide standard ways to load models and run inference or training across many modalities.

Typical workflow:

- Install library

- Load a model by name

- Send input → get output

- Wrap it in your product logic (UI, pipeline, API)
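Here is a minimal Python sketch of that last step, "wrap it in your product logic." It is not the Transformers API itself. `route_feedback`, the queue names, and the confidence threshold are hypothetical product logic. `classifier` can be any callable that returns one `{"label": ..., "score": ...}` dict per input, which is the shape a Transformers `pipeline("sentiment-analysis")` returns. A stub stands in for the model so the sketch runs without downloading weights.

```python
from typing import Callable

# Same shape as a Transformers text-classification pipeline's output:
# one {"label": str, "score": float} dict per input text.
Prediction = dict[str, object]
Classifier = Callable[[list[str]], list[Prediction]]


def route_feedback(texts: list[str], classifier: Classifier,
                   min_confidence: float = 0.8) -> dict[str, list[str]]:
    """Hypothetical product logic: sort customer messages into queues."""
    queues: dict[str, list[str]] = {"praise": [], "complaint": [], "review": []}
    for text, pred in zip(texts, classifier(texts)):
        if float(pred["score"]) < min_confidence:
            queues["review"].append(text)  # low confidence: send to a human
        elif pred["label"] == "NEGATIVE":
            queues["complaint"].append(text)
        else:
            queues["praise"].append(text)
    return queues


def stub_classifier(texts: list[str]) -> list[Prediction]:
    """Stand-in for a real model so the sketch runs offline."""
    return [
        {"label": "NEGATIVE", "score": 0.95} if "broken" in t.lower()
        else {"label": "POSITIVE", "score": 0.9 if "love" in t.lower() else 0.6}
        for t in texts
    ]


queues = route_feedback(["I love it", "Screen arrived broken", "It's fine"], stub_classifier)
print(queues)
```

In a real project you would pass `pipeline("sentiment-analysis")` from `transformers` as `classifier`. Keeping the model behind a plain callable makes the product logic easy to test and the model easy to swap.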

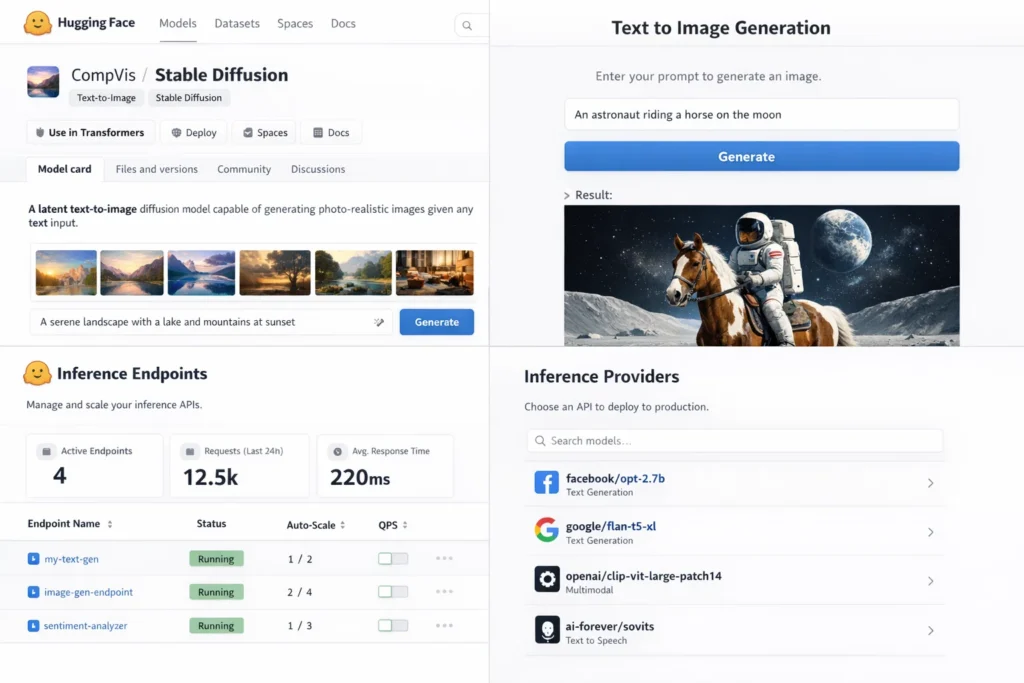

4) Turn it into a shareable demo using Spaces

Spaces are Hugging Face’s “demo hosting” product: you can create an interactive app (often with Gradio or Streamlit) so anyone can try your model in the browser. Hugging Face describes Spaces as a way to create and deploy ML demos quickly, and provides SDK docs for Gradio and Streamlit Spaces.

A simple Space flow looks like:

- Create a Space repo

- Add your app code (Gradio/Streamlit/Docker)

- Push changes (Git-style)

- Hugging Face builds and hosts the demo
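For a Gradio Space, the app code is usually a single Python file (`app.py` by default) that builds an interface and launches it. Below is a minimal sketch. It assumes the `gradio` package is installed in the Space and imports it lazily so the core function can be tested without it. `text_stats` is a hypothetical placeholder for your real model call.

```python
def text_stats(text: str) -> str:
    """Hypothetical placeholder for a model call; replace with real inference."""
    words = text.split()
    return f"{len(words)} words, {len(text)} characters"


def build_demo():
    """Wrap the function in a Gradio UI: one text box in, one text box out."""
    import gradio as gr  # available in Gradio Spaces; imported lazily here

    return gr.Interface(
        fn=text_stats,
        inputs=gr.Textbox(label="Input text"),
        outputs=gr.Textbox(label="Result"),
        title="Text stats demo",
    )

# In app.py, the last line would be: build_demo().launch()
```

You then commit this file to the Space repo, along with a `requirements.txt` for any extra dependencies and the Space's `README.md` configuration, and push with Git. That push is what triggers the build step in the list above.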

5) Deploy to production using Inference Endpoints

When you need reliability, autoscaling, and a production API, Hugging Face offers Inference Endpoints. Their docs position it as a managed way to deploy models to production without spending weeks on infrastructure work.

Typical production flow:

- Choose a model from the Hub

- Configure hardware/region/replicas

- Deploy an endpoint

- Call it from your app like any other API
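Calling a deployed endpoint is just an authenticated HTTPS request. The sketch below uses only the standard library. It assumes the endpoint accepts a JSON body of the form `{"inputs": ...}`, which is the common payload shape for Hugging Face inference APIs, and authenticates with a bearer token. The URL and token are placeholders, and the response format depends on the model's task.

```python
import json
import urllib.request

# Placeholder values: copy the real URL from the endpoint's page and use your own token.
ENDPOINT_URL = "https://your-endpoint.example.com"
HF_TOKEN = "hf_xxx"


def build_request(url: str, token: str, text: str) -> urllib.request.Request:
    """Build a POST request with a JSON payload and bearer-token auth."""
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_endpoint(url: str, token: str, text: str, timeout: float = 30.0):
    """Send the request and decode the JSON response (shape depends on the task)."""
    with urllib.request.urlopen(build_request(url, token, text), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))

# Usage (requires a running endpoint):
# result = call_endpoint(ENDPOINT_URL, HF_TOKEN, "I love this product!")
```

In production, read the token from an environment variable or secret store instead of hard-coding it. If the endpoint is configured to scale to zero, add retries to absorb cold starts.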

6) Understand how costs work (so you don’t get surprised)

Inference Endpoints are pay-as-you-go and billed based on compute over time (prices shown hourly, billed by the minute), with costs influenced by instance type and number of replicas.
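That billing rule gives you a quick estimate: hourly price × replicas × billed minutes ÷ 60. The sketch below assumes a partially used minute is billed as a full minute, so check the current pricing docs. The hourly rate is hypothetical, not a real instance price.

```python
from decimal import Decimal, ROUND_HALF_UP


def estimate_endpoint_cost(hourly_rate: Decimal, replicas: int, running_seconds: int) -> Decimal:
    """Estimate cost for an endpoint listed at an hourly price but billed per minute.

    Assumption: a partially used minute is billed as a full minute.
    """
    if replicas < 0 or running_seconds < 0:
        raise ValueError("replicas and running_seconds must be non-negative")
    billed_minutes = (running_seconds + 59) // 60  # integer ceiling division
    cost = hourly_rate * replicas * billed_minutes / 60
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical instance at $1.80/hour, 2 replicas, running for 90 minutes.
print(estimate_endpoint_cost(Decimal("1.80"), replicas=2, running_seconds=90 * 60))  # prints 5.40
```

The formula also shows why idle replicas matter. A second replica doubles the bill whether or not it receives traffic, so scaling down (or to zero) when idle is a major cost lever.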

For “service providers” (model creators and teams publishing assets)

Publishing a model or dataset to the Hub

If you train or fine-tune a model, you can publish it to the Hub as a repository so others can:

- Discover it

- Reproduce results

- Download and run it

- Build on top of it

This is part of what made the ecosystem explode in size.
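On the Hub, a model repo's `README.md` doubles as its model card. A YAML metadata block at the top, with fields such as `license`, `language`, `tags`, and `pipeline_tag`, helps power search filters and the model page. The sketch below renders such a card for a hypothetical model. It assumes simple string values and does no YAML escaping. You can then upload the card alongside the weights with Git or with `huggingface_hub`'s `HfApi.upload_folder`.

```python
def render_model_card(name: str, description: str, license_id: str,
                      pipeline_tag: str, tags: list[str], language: str = "en") -> str:
    """Render a README.md with a YAML metadata header followed by Markdown.

    Assumes plain values; a real tool should YAML-escape special characters.
    """
    meta_lines = [
        "---",
        f"license: {license_id}",
        f"language: {language}",
        f"pipeline_tag: {pipeline_tag}",
        "tags:",
        *[f"- {tag}" for tag in tags],
        "---",
    ]
    body = [
        f"# {name}",
        "",
        description,
        "",
        "## Intended use",
        "",
        "Describe supported inputs, limitations, and evaluation results here.",
    ]
    return "\n".join(meta_lines + [""] + body) + "\n"


card = render_model_card(
    name="support-ticket-classifier",  # hypothetical model
    description="Classifies customer support tickets by urgency.",
    license_id="apache-2.0",
    pipeline_tag="text-classification",
    tags=["customer-support", "distilbert"],
)
print(card)
```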

Optional: provide a live demo (Space) or a production endpoint

Creators often pair a model repo with:

- A Space for “try it now” demos

- An Inference Endpoint for production-style usage

Technical Overview (Simple)

Think of Hugging Face as a pipeline:

- Hub = storage + discovery + collaboration for models/datasets/Spaces

- Libraries (like Transformers) = easy tools to load and run models

- Spaces = hosted demos (Gradio/Streamlit/Docker)

- Inference Endpoints = managed production deployment + autoscaling

Hugging Face’s Business Model Explained

How Hugging Face makes money (all revenue streams)

Hugging Face monetizes through a mix of subscriptions + usage-based compute services:

- Hub subscriptions for individuals and teams (Pro/Team/Enterprise)

- Production deployment services (Inference Endpoints) billed by compute time

- “Inference Providers” pay-as-you-go access to hosted models from multiple providers (with centralized billing)

- Other compute features on the platform (for example, Jobs billed by hardware usage)

Pricing structure

Hugging Face positions its paid offerings in two big buckets:

1) Hub subscriptions (accounts and orgs)

- Team subscription is shown starting at $20/user/month

- Enterprise is “contact sales” with flexible contracts

2) Compute usage (deployment/inference hardware)

- Inference Endpoints (dedicated) start from about $0.033/hour (instance-based pricing)

How deployment costs work (important detail)

For Inference Endpoints, Hugging Face shows prices by the hour, but documents that billing is calculated by the minute. Your cost depends on the instance type (CPU/GPU), replicas, and how long the endpoint is running.

Revenue streams in plain language

- Subscription revenue: teams pay per seat for collaboration, security, governance, and storage (especially for private repos)

- Compute revenue: customers pay for managed infrastructure to deploy models as production APIs (Inference Endpoints)

- Usage-based inference marketplace: customers pay as they use models via Inference Providers, with transparent pay-as-you-go pricing

- Optional compute tasks: features like Jobs are billed by hardware time (computed by the minute)

Market size and growth signals

The Hub's reported scale (2M+ models, 500k datasets, and 1M demos) is a strong signal of network effects: more creators attract more builders, which in turn drives enterprise adoption and compute usage.

Profit margin insights

Hugging Face’s profitability typically improves when:

- Organizations expand seats (Team/Enterprise) and store more private assets

- Production deployments grow (more endpoints, larger hardware, longer uptime)

- Customers consolidate inference usage through Inference Providers with centralized billing

Revenue model breakdown

| Revenue Stream | Description | Who Pays | Nature |
|---|---|---|---|
| Hub subscriptions | Pro/Team/Enterprise access, collaboration, controls | Individuals & orgs | Recurring |
| Inference Endpoints | Dedicated, autoscaling production deployments | Product teams | Usage-based compute |
| Inference Providers | Pay-as-you-go model access via providers | Builders & teams | Usage-based |
| Jobs / compute tasks | Run tasks on HF infra, billed by minute | Pro/Team/Enterprise users | Usage-based |

Key Features That Make Hugging Face Successful

1) The Hub for models, datasets, and demos

Why it matters: It gives the AI community one place to publish, discover, and reuse ML assets.

How it benefits users: You can browse and pick from a massive ecosystem instead of starting from zero.

Technical innovation: Everything is organized like repositories with collaboration and discoverability baked in.

2) Git-style repositories and version control

Why it matters: ML work changes constantly (new weights, new dataset versions, new evaluation results).

How it benefits users: You can track versions, roll back, collaborate, and ship updates safely.

Technical innovation: Models, datasets, and Spaces are hosted as Git repositories on the Hub.
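If you are building a similar platform, the core idea behind repo-style versioning fits in a few lines. Every commit is an immutable snapshot identified by a content hash, and branches or tags are movable names that point at commits. The in-memory sketch below uses hypothetical class and method names. It is not how the Hub or Git actually store data: Git stores trees of blobs, and the Hub uses dedicated large-file storage for weights.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Commit:
    sha: str
    parent: Optional[str]
    message: str
    files: dict  # path -> file content (a full snapshot, not a diff)


@dataclass
class ModelRepo:
    """Hypothetical in-memory repo: immutable commits plus movable refs."""
    commits: dict = field(default_factory=dict)  # sha -> Commit
    refs: dict = field(default_factory=dict)     # branch or tag name -> sha

    def resolve(self, revision: str) -> str:
        """Turn a branch, tag, or commit sha into a commit sha."""
        sha = self.refs.get(revision, revision)
        if sha not in self.commits:
            raise KeyError(f"unknown revision {revision!r}")
        return sha

    def commit(self, files: dict, message: str, branch: str = "main") -> str:
        parent = self.refs.get(branch)
        payload = json.dumps(
            {"parent": parent, "message": message, "files": files}, sort_keys=True
        ).encode("utf-8")
        sha = hashlib.sha1(payload).hexdigest()  # ID derived from content + history
        self.commits[sha] = Commit(sha, parent, message, dict(files))
        self.refs[branch] = sha  # advance the branch to the new commit
        return sha

    def tag(self, name: str, revision: str) -> None:
        self.refs[name] = self.resolve(revision)  # a tag pins one commit

    def checkout(self, revision: str) -> dict:
        return dict(self.commits[self.resolve(revision)].files)

    def rollback(self, branch: str, revision: str) -> None:
        """Move a branch back to an earlier commit; later commits stay stored."""
        self.refs[branch] = self.resolve(revision)


repo = ModelRepo()
v1 = repo.commit({"config.json": '{"layers": 6}'}, "Initial weights")
repo.tag("v1.0", v1)
v2 = repo.commit({"config.json": '{"layers": 12}'}, "Bigger model")
repo.rollback("main", "v1.0")  # main serves the v1 snapshot again
print(repo.checkout("main"))
```

This split between immutable IDs and movable names is why pinning a download to a specific commit (the `revision` argument in Hugging Face libraries) gives reproducible results while `main` keeps moving.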

3) “Spaces” for instant demos people can actually try

Why it matters: A demo makes your model real for stakeholders, customers, and the community.

How it benefits users: You can share a working app link instead of screenshots or notebooks.

Technical innovation: Spaces are repositories too, so you can develop like software and deploy automatically.

4) Gradio and Streamlit support for fast app building

Why it matters: Most teams want a UI fast, not weeks of frontend work.

How it benefits users: You can build interactive ML apps in Python and host them quickly.

Technical innovation: Hugging Face provides dedicated Space SDK docs for Streamlit and for Gradio-based Spaces.

5) Inference Endpoints for production deployment

Why it matters: Demos are great, but products need reliability, scaling, and predictable ops.

How it benefits users: You can deploy models as managed endpoints without building infra from scratch.

Technical innovation: Dedicated endpoints on autoscaling infrastructure, configured directly from the Hub.

6) Clear compute-based pricing for deployments

Why it matters: Hosting models can get expensive if pricing is unclear.

How it benefits users: You can estimate cost based on instance type and uptime.

Technical innovation: Pricing is shown hourly, with cost calculated by the minute for Inference Endpoints.

7) Inference Providers for “try models without running servers”

Why it matters: Sometimes you want to evaluate models quickly without deploying anything.

How it benefits users: You get serverless-style access to many models with centralized billing.

Technical innovation: Hugging Face frames this as access to 200+ models from providers with pay-as-you-go pricing.
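The “many providers, one bill” idea can be illustrated with a small router. It tries providers in order of preference and records every successful call in a single shared ledger. This is a minimal sketch: the provider names, per-1K-token prices, and `send` callables are hypothetical, and it is not how Hugging Face implements Inference Providers.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Callable


@dataclass
class Provider:
    name: str
    price_per_1k_tokens: Decimal
    send: Callable[[str], tuple[str, int]]  # prompt -> (output, tokens used)


@dataclass
class Router:
    providers: list  # in order of preference
    ledger: list = field(default_factory=list)  # one shared usage record

    def complete(self, user: str, prompt: str) -> str:
        errors = []
        for p in self.providers:
            try:
                output, tokens = p.send(prompt)
            except ConnectionError as exc:  # provider down: try the next one
                errors.append(f"{p.name}: {exc}")
                continue
            cost = p.price_per_1k_tokens * tokens / 1000
            self.ledger.append({"user": user, "provider": p.name,
                                "tokens": tokens, "cost": cost})
            return output
        raise RuntimeError("all providers failed: " + "; ".join(errors))

    def invoice(self, user: str) -> Decimal:
        """Total across every provider the user touched: one bill."""
        return sum((e["cost"] for e in self.ledger if e["user"] == user), Decimal("0"))


def flaky(prompt: str) -> tuple[str, int]:
    raise ConnectionError("timeout")


def healthy(prompt: str) -> tuple[str, int]:
    return prompt.upper(), 500  # fake output and token count


router = Router([Provider("provider-a", Decimal("0.20"), flaky),
                 Provider("provider-b", Decimal("0.40"), healthy)])
print(router.complete("alice", "hello"), router.invoice("alice"))
```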

8) A single consistent ecosystem for experimenting and shipping

Why it matters: AI teams hate glue-work between tools.

How it benefits users: One platform can cover discover → build → demo → deploy.

Technical innovation: Hub + repos + Spaces + Endpoints are designed to connect as one workflow.

9) Collaboration for teams and organizations

Why it matters: Companies need private workspaces, permissions, and governance.

How it benefits users: Teams can work on private repos, share internally, and control visibility.

Technical innovation: The Hub is built around collaborative repo workflows (branches/tags/visibility controls).
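For a platform builder, the visibility and permission rules behind private repos come down to a small access check. The sketch below uses hypothetical role names and rules (read < write < admin). Real hubs, including Hugging Face, layer more on top, such as fine-grained access tokens and resource groups.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Optional


class Role(IntEnum):
    NONE = 0
    READ = 1
    WRITE = 2
    ADMIN = 3


@dataclass
class Repo:
    owner_org: str
    private: bool


@dataclass
class User:
    name: str
    org_roles: dict = field(default_factory=dict)  # org name -> Role


def effective_role(user: Optional[User], repo: Repo) -> Role:
    """Org membership grants its role; public repos grant READ to everyone,
    including anonymous visitors (user=None)."""
    org_role = user.org_roles.get(repo.owner_org, Role.NONE) if user else Role.NONE
    baseline = Role.NONE if repo.private else Role.READ
    return max(org_role, baseline)


def can(user: Optional[User], repo: Repo, needed: Role) -> bool:
    return effective_role(user, repo) >= needed


private_repo = Repo(owner_org="acme", private=True)
public_repo = Repo(owner_org="acme", private=False)
alice = User("alice", {"acme": Role.WRITE})
bob = User("bob")  # not a member of "acme"
print(can(alice, private_repo, Role.WRITE), can(bob, private_repo, Role.READ))
```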

10) Strong network effects (the “more people share, the better it gets” effect)

Why it matters: More models + datasets + demos means more starting points for every new project.

How it benefits users: Faster iteration, easier benchmarking, quicker prototyping.

Technical innovation: Hugging Face explicitly highlights the scale of the Hub (models, datasets, demos).

Recent platform shifts and “what’s new” direction

One notable recent shift is that Hugging Face now presents two paths side by side:

- Inference Providers for quick evaluation across many hosted options

- Inference Endpoints for dedicated production deployments, with monitoring and a metrics dashboard

AI/ML integrations that matter

Hugging Face’s “integration” story is less about one AI feature and more about making models usable end-to-end: repository-based sharing, demo hosting, and managed deployment—so AI moves from “artifact” to “product.”

Hugging Face’s Impact & Market Opportunity

Industry disruption it caused

Hugging Face didn’t just popularize a few tools—it changed how teams ship AI. Before, sharing a model often meant a research paper + a messy repo + “good luck running it.” Hugging Face made the default expectation:

- models should be discoverable

- demos should be clickable

- deployments should be repeatable

- collaboration should feel like software development

That’s a big shift because it moved AI from “research artifact” to “usable product building block.”

Market demand and growth drivers

The demand behind Hugging Face-style platforms is powered by a few huge trends:

- Companies want AI features but don’t want to reinvent infrastructure

- Open-source models keep improving and multiplying

- Teams need faster ways to evaluate, compare, and deploy models

- Demos are now part of sales, fundraising, and stakeholder buy-in

- Production deployments need reliability, cost control, and governance

So the platform is sitting right in the path of both developer adoption and enterprise adoption.

User demographics and behavior

Hugging Face attracts a wide set of users, but their behavior tends to cluster into a few “loops”:

- Explore and compare models → pick one → run locally → iterate

- Publish a model → attach a demo → get feedback → improve

- Benchmark multiple models quickly → choose the best tradeoff → deploy an endpoint

- Build a demo for a client/stakeholder → convert that demo into a production workflow

In other words: people use it as a continuous pipeline, not a one-time download site.

Geographic presence

Because it’s a cloud platform and a global open-source community, adoption isn’t tied to one region. You’ll see usage from startups, universities, and enterprises worldwide.

Future projections

Where this category is heading:

- More “model marketplaces” with stronger evaluation and trust signals

- Easier production rollouts (scaling, monitoring, guardrails)

- More enterprise governance (permissions, audit logs, private registries)

- More end-to-end workflows that connect datasets → training → evaluation → deployment

Opportunities for entrepreneurs

This massive success is why many entrepreneurs want to create similar platforms—because the market rewards anything that helps teams:

- discover the right model faster

- prove it works via a demo

- deploy it without heavy infra work

- operate it safely and cost-effectively

Building Your Own Hugging Face–Like Platform

Why businesses want Hugging Face–style platforms

Hugging Face proved there’s massive demand for a “single home” where AI assets can be discovered, shared, tested, and deployed. Businesses want similar platforms because:

- AI teams waste time stitching tools together (storage, versioning, demos, deployment)

- Leaders want a reliable way to reuse models internally across teams

- Startups and agencies want quick demos to win clients and funding

- Enterprise teams want governance, privacy, and repeatability

- The open-source model ecosystem is growing too fast to manage manually

Key considerations for development

If you want to build a Hugging Face-like platform, the important building blocks are:

- Model registry and discovery (search, filters, categories)

- Dataset hosting and versioning

- Repo-style lifecycle (commits, releases, permissions, tags)

- Demo hosting (Spaces-like environment for interactive apps)

- Deployment layer (API endpoints with scaling options)

- Billing and usage tracking for compute

- Community and trust elements (docs, licenses, ratings, safety notes)
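The first building block, model registry and discovery, can start as a simple filter-then-rank query before you need a dedicated search engine. The sketch below runs over an in-memory list. The fields (`task`, `license`, `downloads`, `tags`) mirror what a model card exposes, but the entries are hypothetical, and ranking by downloads is just one possible choice.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelEntry:
    repo_id: str
    task: str
    license: str
    downloads: int
    tags: set = field(default_factory=set)


def search(registry: list, query: str = "", task: Optional[str] = None,
           licenses: Optional[set] = None, limit: int = 10) -> list:
    """Filter by task and license, match the query against repo id and tags,
    then rank by downloads (most popular first)."""
    q = query.lower()
    hits = [
        m for m in registry
        if (task is None or m.task == task)
        and (licenses is None or m.license in licenses)
        and (not q or q in m.repo_id.lower() or any(q in t.lower() for t in m.tags))
    ]
    return sorted(hits, key=lambda m: m.downloads, reverse=True)[:limit]


registry = [  # hypothetical entries
    ModelEntry("acme/sentiment-small", "text-classification", "apache-2.0", 12_000, {"sentiment"}),
    ModelEntry("acme/sentiment-large", "text-classification", "cc-by-nc-4.0", 40_000, {"sentiment"}),
    ModelEntry("lab/speech-tiny-ft", "automatic-speech-recognition", "mit", 8_000, {"audio"}),
]

for m in search(registry, "sentiment", task="text-classification", licenses={"apache-2.0", "mit"}):
    print(m.repo_id, m.downloads)
```

As the catalog grows, these filters usually move into a database index or search engine, but the shape of the query stays the same.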

Cost Factors & Pricing Breakdown

Hugging Face–Like App Development — Market Price

| Development Level | Inclusions | Estimated Market Price (USD) |
|---|---|---|
| 1. Basic AI Model Hosting & API MVP | Core web platform for hosting and calling ML/AI models, user registration & login, basic model upload or API connection, simple inference endpoints, basic documentation portal, API key management, minimal moderation & safety filters, standard admin panel, basic usage analytics | $100,000 |
| 2. Mid-Level AI Model Platform & Developer Hub | Multi-model hosting support, model versioning, dataset storage basics, inference API endpoints (text/image/audio), usage dashboards, workspace/projects, access control, SDK samples, stronger moderation hooks, credits/usage tracking, analytics dashboard, polished web UI and developer console | $200,000 |
| 3. Advanced Hugging Face–Level AI Platform Ecosystem | Large-scale multi-tenant ML platform with model hub, dataset hub, fine-tuning pipelines, enterprise orgs & RBAC/SSO, model marketplace, inference scaling infrastructure, API gateway, audit logs, advanced observability, cloud-native scalable architecture, robust safety & policy enforcement | $350,000+ |

Hugging Face–Style AI Model Platform Development

The prices above reflect the global market cost of developing a Hugging Face-like AI model hosting, sharing, and inference platform. They typically range from $100,000 to $350,000+, with a delivery timeline of around 4–12 months for a full, from-scratch build. This usually includes model hosting infrastructure, dataset management, inference APIs, developer tooling, analytics, governance controls, and production-grade cloud infrastructure capable of handling high compute workloads.

Miracuves Pricing for a Hugging Face–Like Custom Platform

Miracuves Price: Starts at $15,999

This is positioned for a feature-rich, JS-based Hugging Face-style AI platform that can include:

- AI model hosting or external model API integrations

- Model inference APIs for text, image, or audio use cases

- Developer portal with API documentation and usage dashboards

- User accounts, projects/workspaces, and access controls

- Usage and credit tracking with optional subscription or pay-per-use billing

- Core moderation, compliance, and safety hooks

- A modern, responsive web interface plus optional companion mobile apps

From this foundation, the platform can be extended into model marketplaces, dataset hosting, fine-tuning workflows, enterprise AI governance, and large-scale AI SaaS deployments as your AI ecosystem matures.

Note: This includes full non-encrypted source code (complete ownership), complete deployment support, backend & API setup, admin panel configuration, and assistance with publishing on the Google Play Store and Apple App Store—ensuring you receive a fully operational AI platform ecosystem ready for launch and future expansion.

Delivery Timeline for a Hugging Face–Like Platform with Miracuves

For a Hugging Face-style, JS-based custom build, the typical delivery timeline with Miracuves is 30–90 days, depending on:

- Depth of AI model hosting and inference features

- Number and complexity of model, storage, billing, and moderation integrations

- Complexity of enterprise features (RBAC, SSO, governance, audit logs)

- Scope of web portal, mobile apps, branding, and long-term scalability targets

Tech Stack

We prefer to build the entire solution in JavaScript (Node.js / Nest.js / Next.js for the web backend and frontend) and to use Flutter / React Native for the mobile apps. This choice favors speed, scalability, and the benefit of one codebase serving multiple platforms.

Other technology stacks can be discussed and arranged upon request when you contact our team, ensuring they align with your internal preferences, compliance needs, and infrastructure choices.

Essential features to include

A strong Hugging Face-style MVP should include:

- Public/private model repositories

- Metadata pages (README, model card, usage examples)

- Search + category browsing

- Basic dataset hosting

- Demo hosting for at least one framework (like a “Space”)

- Simple deployment option (one-click endpoint)

- Usage tracking and billing
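The “usage tracking and billing” item is often implemented as prepaid credits: the platform checks the balance before each API call and deducts the call's price. The sketch below uses hypothetical names and prices. A production version would persist balances in a database and make the check-and-deduct step atomic there, not just in one process.

```python
import threading
from decimal import Decimal


class InsufficientCredits(Exception):
    pass


class CreditMeter:
    """Hypothetical prepaid-credit meter keyed by API key."""

    def __init__(self, prices: dict):
        self.prices = prices           # model id -> price per call
        self.balances: dict = {}       # api key -> remaining credits
        self.usage: list = []          # (api key, model id, price) records
        self._lock = threading.Lock()  # check-and-deduct is atomic within this process

    def top_up(self, api_key: str, amount: Decimal) -> None:
        with self._lock:
            self.balances[api_key] = self.balances.get(api_key, Decimal("0")) + amount

    def charge(self, api_key: str, model_id: str) -> Decimal:
        """Deduct the price of one call or refuse it; returns the new balance."""
        price = self.prices[model_id]
        with self._lock:
            balance = self.balances.get(api_key, Decimal("0"))
            if balance < price:
                raise InsufficientCredits(f"{api_key}: balance {balance}, need {price}")
            self.balances[api_key] = balance - price
            self.usage.append((api_key, model_id, price))
            return self.balances[api_key]


meter = CreditMeter({"text-small": Decimal("0.002"), "image-gen": Decimal("0.04")})
meter.top_up("key_123", Decimal("0.05"))
meter.charge("key_123", "image-gen")   # remaining: 0.01
meter.charge("key_123", "text-small")  # remaining: 0.008
```

The same usage records can feed both the customer's dashboard and monthly invoices, and a subscription plan can simply top up credits on each billing cycle.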

High-impact extensions later:

- Benchmarking and evaluation dashboards

- Built-in safety scanning and license compliance tools

- Multi-region deployments

- Enterprise governance and audit logs

- Provider marketplace (serverless inference)

Conclusion

Hugging Face became the default “home” for open AI because it solved a very real pain: AI is useless if it stays trapped in notebooks. By making models and datasets shareable like software repos—and pairing that with demos and deployment options—it made AI feel practical, reusable, and collaborative.

For founders and builders, the big lesson is this: the winners in AI aren’t only the teams with the best models. The winners are the teams that make models easy to find, easy to trust, easy to demo, and easy to ship into real products.

FAQs :-

How does Hugging Face make money?

Hugging Face makes money through Hub subscriptions (Pro/Team/Enterprise) and compute-based services such as Inference Endpoints and Inference Providers, which charge based on usage and infrastructure.

Is Hugging Face free to use?

Yes. Many parts of Hugging Face are free, especially browsing public models and datasets. Paid plans unlock more collaboration, private assets, and enterprise features.

What is Hugging Face mainly used for?

Hugging Face is used to discover, share, and deploy AI models and datasets, and to build demos using Spaces.

What are Hugging Face Spaces?

Spaces are hosted demo apps where you can run and share interactive AI applications built with Gradio or Streamlit, or packaged as a Docker container.

What are Inference Endpoints?

Inference Endpoints are Hugging Face’s managed production deployments that let you serve a model as a scalable API.

Can I deploy models without managing servers?

Yes. Hugging Face supports serverless-style access through Inference Providers and managed hosting through Inference Endpoints.

Is Hugging Face available in my country?

Generally yes, because it’s a web-based global platform. Availability can vary based on compliance and provider infrastructure.

Can I build something similar to Hugging Face?

Yes, but building a full ecosystem is complex. You’d need a model registry, storage, repo versioning, demo hosting, deployment infrastructure, and compute billing—plus strong community features.

What makes Hugging Face different from competitors?

Its biggest differentiator is the combination of a massive community Hub plus a full lifecycle path: discover → build → demo → deploy.

How can I create a Hugging Face-like platform with Miracuves?

Miracuves can help you build an AI hub with model/dataset hosting, marketplace discovery, demo hosting modules, scalable endpoint deployment, and billing—so you can launch a Hugging Face-style platform faster.