You’ve heard the horror stories about data breaches, leaked conversations, stolen customer data, and AI apps exposing sensitive business information.

And honestly? Those fears are valid.

In 2026, a white-label ChatGPT app is not just a “cool feature.” It’s a high-risk product category because it handles:

- Personal data

- Private chat messages

- Business secrets

- Payment details (if monetized)

- AI-generated outputs that can create legal risks

The truth is: white-label does not automatically mean unsafe. But it doesn’t automatically mean secure, either.

This guide will give you:

- An honest safety assessment

- The real risks most people miss

- The security standards you must demand

- Practical steps to reduce risk

- Why Miracuves is the security-first choice for white-label AI platforms

Understanding White-Label ChatGPT App Security Landscape

What “white-label security” actually means

A white-label ChatGPT app is built by a provider and rebranded for your business. Security responsibility is shared — the provider secures the platform, and you must configure it safely.

Why people worry about white-label apps

Clients fear:

- Data leaks

- Weak authentication

- Unsecured APIs

- Inadequate vendor security practices

Current threat landscape

AI-powered apps see targeted attacks such as:

- Prompt injection

- Data scraping

- Unauthorized access

Breaches often result from misconfigurations or poor vendor security.

Security standards in 2026

Security is no longer optional: frameworks such as ISO 27001 and SOC 2, together with privacy laws such as GDPR, now set the baseline that customers and regulators expect.

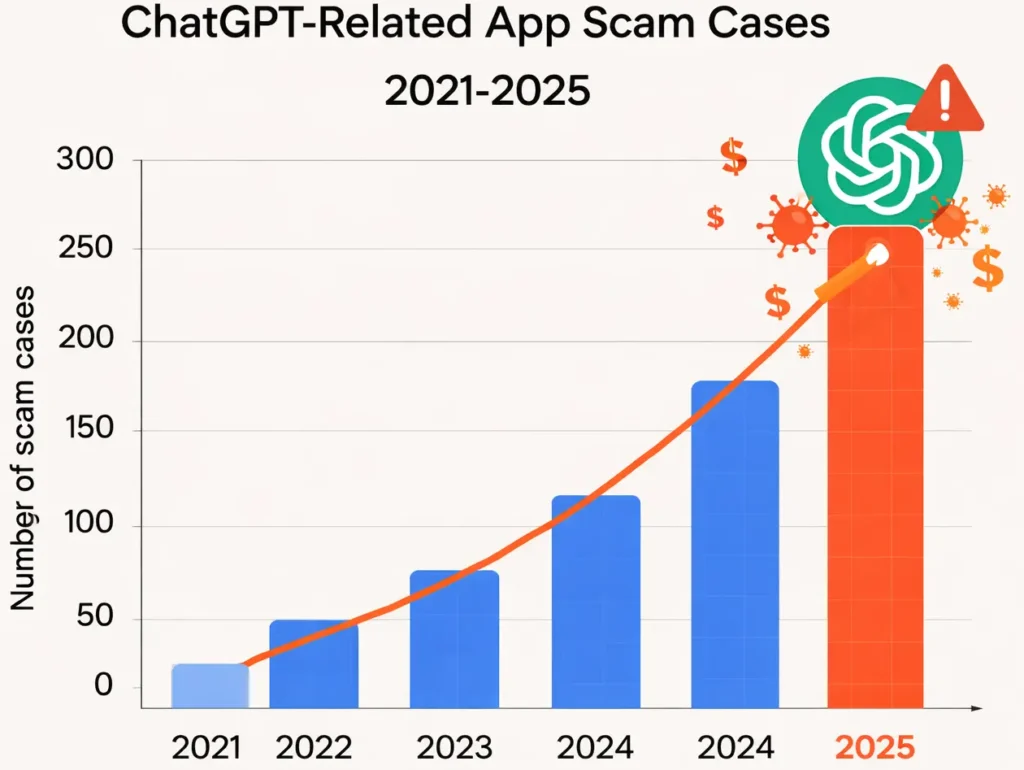

Real-world trends

Studies show enterprise apps with weak security are:

- Far more likely to experience data loss

- More costly to remediate

Security incidents continue to rise, especially around AI and data.

Key Security Risks & How to Identify Them

Data Protection & Privacy Risks

A white-label ChatGPT app handles extremely sensitive data, often without users realizing it.

User personal information

If your app collects names, emails, phone numbers, or profile details, that becomes regulated personal data under GDPR/CCPA.

Payment data security

If your app has subscriptions, in-app purchases, or payment gateways, your risk level jumps immediately. Any card data your app stores, processes, or transmits falls under PCI DSS.

Location tracking concerns

Some AI apps integrate location for personalization, language, or regional services. If location is stored improperly, it becomes a major compliance and reputation risk.

GDPR/CCPA compliance

The biggest mistake: thinking compliance is “just a privacy policy.”

In reality, compliance requires:

- lawful data processing

- consent management

- deletion/export workflows

- breach reporting readiness
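Deletion and export workflows are easier to trust when they exist as code you can test, not just as promises in a privacy policy. Here is a minimal Python sketch. It assumes a hypothetical in-memory store keyed by user ID. A real system would also need to reach backups, logs, and any third-party processors.

```python
import json
from dataclasses import dataclass, field


@dataclass
class UserDataStore:
    """Hypothetical in-memory store: user_id -> profile and chat history."""
    profiles: dict = field(default_factory=dict)
    chats: dict = field(default_factory=dict)

    def export_user(self, user_id: str) -> str:
        # Data portability: return everything held about the user as JSON.
        return json.dumps(
            {
                "user_id": user_id,
                "profile": self.profiles.get(user_id, {}),
                "chats": self.chats.get(user_id, []),
            },
            indent=2,
        )

    def delete_user(self, user_id: str) -> int:
        # Erasure: remove profile and chat history, return how many records were removed.
        removed = 0
        if self.profiles.pop(user_id, None) is not None:
            removed += 1
        removed += len(self.chats.pop(user_id, []))
        return removed


store = UserDataStore()
store.profiles["u1"] = {"email": "ana@example.com"}
store.chats["u1"] = ["Hello", "Summarize my contract"]
print(store.export_user("u1"))
print(store.delete_user("u1"))  # 3 records removed
```

Returning a count from the deletion makes it straightforward to log that a request was actually fulfilled.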

Technical Vulnerabilities

Most white-label AI app breaches happen because of technical shortcuts.

Code quality issues

If the provider ships low-quality code, it often contains:

- insecure storage

- weak input validation (which opens the door to SQL injection)

- hardcoded keys

- poor session handling
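Two of those shortcuts, weak input validation and hardcoded keys, are easy to show side by side. The Python sketch below uses the standard library's `sqlite3` with an in-memory table. `APP_API_KEY` is a hypothetical environment variable name.

```python
import os
import sqlite3

# Secrets come from the environment (or a secrets manager), never from source code.
# APP_API_KEY is a hypothetical variable name used only for illustration.
API_KEY = os.environ.get("APP_API_KEY")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("ana@example.com",))


def find_user_unsafe(email: str):
    # DON'T: string formatting lets the input rewrite the query (SQL injection).
    return conn.execute(f"SELECT id, email FROM users WHERE email = '{email}'").fetchall()


def find_user(email: str):
    # DO: a placeholder sends the value separately, so it is never parsed as SQL.
    return conn.execute("SELECT id, email FROM users WHERE email = ?", (email,)).fetchall()


attack = "' OR '1'='1"
print(find_user_unsafe(attack))  # [(1, 'ana@example.com')]  every row leaks
print(find_user(attack))         # []  treated as a literal string
```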

Server security gaps

If servers are not hardened, attackers can exploit:

- exposed admin panels

- weak firewall rules

- unpatched OS vulnerabilities

- misconfigured cloud storage

API vulnerabilities

AI apps depend heavily on APIs, and insecure APIs can lead to:

- data scraping

- unauthorized access

- token hijacking

- rate-limit bypass
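Rate limiting is the usual first defense against scraping and brute-force abuse. Below is a minimal per-API-key token bucket in Python. The capacity and refill rate are illustrative assumptions. In a multi-server deployment, the counters would normally live in a shared store rather than in process memory.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable clock makes the limiter easy to test
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per API key, so a single scraper cannot use up everyone's quota.
buckets = {}


def check_request(api_key: str) -> bool:
    """Return False when the caller should get HTTP 429 Too Many Requests."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(capacity=5, rate=1.0)  # illustrative limits
    return buckets[api_key].allow()
```

A token bucket tolerates short bursts, such as a user sending a few quick messages, while still capping sustained request rates.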

Third-party integrations

White-label ChatGPT apps usually connect with:

- analytics tools

- payment providers

- CRM systems

- email providers

- push notification services

Every integration expands the attack surface.
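Payment providers and other services often call your app back through webhooks. A common safeguard for these calls is an HMAC signature over the request body. This sketch uses a generic, hypothetical scheme: it signs `timestamp.payload` with HMAC-SHA256 and a shared secret. Real providers each define their own header names and signed-payload format, so follow their documentation.

```python
import hashlib
import hmac
import time
from typing import Optional

WEBHOOK_SECRET = b"replace-with-secret-from-env"  # hypothetical; load from a secrets store
MAX_AGE_SECONDS = 300  # reject deliveries older than 5 minutes (replay protection)


def sign(payload: bytes, timestamp: int, secret: bytes = WEBHOOK_SECRET) -> str:
    # Sign "timestamp.payload" so the timestamp cannot be changed on its own.
    message = str(timestamp).encode() + b"." + payload
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_webhook(payload: bytes, timestamp: int, signature: str,
                   now: Optional[float] = None) -> bool:
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # stale or replayed delivery
    expected = sign(payload, timestamp)
    # compare_digest runs in constant time, so response timing does not leak the signature.
    return hmac.compare_digest(expected.encode(), signature.encode())
```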

Business Risks

Even if the issue starts as “technical,” the damage becomes business-critical.

Legal liability

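If user data leaks, your company is the one users will blame, not the white-label vendor. As the business collecting the data, you are usually the data controller, so you may also be legally responsible even when the vendor caused the leak.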

Reputation damage

AI apps rely on trust. A single breach can permanently destroy adoption and retention.

Financial losses

Costs typically include:

- incident response

- legal counsel

- user compensation

- downtime

- customer churn

Regulatory penalties

Non-compliance can lead to penalties under GDPR, CCPA, and regional privacy laws.

Risk Assessment Checklist

Use this quick checklist before choosing any white-label ChatGPT app provider:

- Is data encrypted at rest and in transit?

- Are APIs protected with OAuth + rate limiting?

- Is user chat data isolated per tenant/business?

- Are logs monitored for suspicious activity, and sanitized so they contain no sensitive chat content?

- Are admin panels protected with 2FA?

- Is there a written incident response plan?

- Is there a clear security update policy?

- Can users request data deletion/export?

- Does the provider support GDPR/CCPA compliance?

- Is payment processing PCI DSS compliant (if applicable)?
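Tenant isolation is worth checking concretely: every query for chat data should be scoped to the tenant the caller is authenticated as. Here is a minimal sketch. It assumes a hypothetical `messages` table with a `tenant_id` column in SQLite.

```python
import sqlite3


class TenantChatRepository:
    """Hypothetical data-access layer that scopes every query to one tenant."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str):
        self.conn = conn
        self.tenant_id = tenant_id  # taken from the authenticated session, never from user input

    def add_message(self, user_id: str, text: str) -> None:
        self.conn.execute(
            "INSERT INTO messages (tenant_id, user_id, text) VALUES (?, ?, ?)",
            (self.tenant_id, user_id, text),
        )

    def messages_for(self, user_id: str) -> list:
        # tenant_id is always in the WHERE clause, so another tenant's rows never match.
        rows = self.conn.execute(
            "SELECT text FROM messages WHERE tenant_id = ? AND user_id = ? ORDER BY id",
            (self.tenant_id, user_id),
        ).fetchall()
        return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE messages (id INTEGER PRIMARY KEY, tenant_id TEXT, user_id TEXT, text TEXT)"
)
acme = TenantChatRepository(conn, "acme")
globex = TenantChatRepository(conn, "globex")
acme.add_message("u1", "Acme pricing draft")
print(globex.messages_for("u1"))  # []  same user ID, different tenant
print(acme.messages_for("u1"))    # ['Acme pricing draft']
```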

Security Standards Your White-Label ChatGPT App Must Meet

Essential Certifications and Compliance Standards

If your white-label ChatGPT app is meant for real users and real businesses, these certifications and standards are not “extra.” They are baseline trust signals in 2026.

ISO 27001 compliance

ISO 27001 certification shows the provider runs an independently audited Information Security Management System (ISMS).

It matters because it shows security is managed as a process, not just “tools.”

SOC 2 Type II

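SOC 2 Type II is one of the strongest vendor trust indicators. In a Type II audit, an independent auditor tests whether security controls actually operated over a period of months. A SOC 2 Type I report covers only a single point in time.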

This is especially important for AI apps that handle user conversations and business data.

GDPR compliance

If your users include EU residents, GDPR compliance is mandatory.

It affects:

- consent

- data retention

- user rights

- breach reporting

HIPAA (if applicable)

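If your ChatGPT app is used in healthcare, wellness, patient support, or medical record workflows, HIPAA can become relevant. HIPAA applies to covered entities (healthcare providers, health plans, and clearinghouses) and to their business associates.

Even if you are not a hospital, you may count as a business associate if you handle protected health information (PHI) on behalf of one.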

PCI DSS for payments

If your app accepts:

- subscriptions

- credit cards

- in-app purchases

You must ensure PCI DSS compliant payment processing.

Technical Requirements

These are the technical controls your white-label ChatGPT app must have in 2026.

Encryption in transit and at rest

At minimum, your app must support:

- TLS encryption for all traffic

- encrypted storage for chats and user data

True end-to-end encryption is rare in AI apps, but strong encryption in transit and at rest is non-negotiable.
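For encryption in transit, the key points are verifying certificates and refusing old protocol versions. Below is a minimal Python sketch for outbound connections, for example to the model API or a payment gateway. It uses only the standard `ssl` module. Encryption at rest is not covered here; it usually relies on the storage encryption provided by your database or cloud platform.

```python
import ssl

# Client-side context for outbound HTTPS calls from the app's backend.
ctx = ssl.create_default_context()            # verifies certificates and hostnames
ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSLv3, TLS 1.0 and TLS 1.1

print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

You can then pass the context to calls such as `urllib.request.urlopen(url, context=ctx)`.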

Secure authentication (2FA/OAuth)

Your app must support:

- strong password policies

- 2FA for admin accounts

- OAuth login options where relevant

- session expiry and token rotation
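Most authenticator apps implement time-based one-time passwords (TOTP, RFC 6238). The sketch below shows how a server can verify such codes for admin 2FA using only Python's standard library. In production, a maintained library is the safer choice. The one-period drift window is an illustrative assumption.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    # RFC 4226: HMAC-SHA1 over the 8-byte big-endian counter, then dynamic truncation.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    # RFC 6238: HOTP where the counter is the number of `step`-second periods since the epoch.
    at = time.time() if at is None else at
    return hotp(secret, int(at // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    at = time.time() if at is None else at
    # Check the current period plus `window` periods either side to tolerate clock drift.
    return any(
        hmac.compare_digest(totp(secret, at + i * step, step).encode(), submitted.encode())
        for i in range(-window, window + 1)
    )
```

Each admin gets a random secret, for example `secrets.token_bytes(20)`. Authenticator apps usually receive that secret base32-encoded via `base64.b32encode`.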

Regular security audits

Audits should include:

- infrastructure review

- application security review

- cloud configuration review

Penetration testing

Pen testing should be done:

- before launch

- after major updates

- at least annually

TLS (SSL) certificates

Every environment must serve traffic over HTTPS with valid TLS certificates (the older SSL protocol itself is deprecated):

- production

- staging

- admin panel

- APIs

Secure API design

Your API must include:

- authentication for every endpoint

- strict role-based access control

- rate limiting

- request validation

- logging and monitoring
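Role-based access control works best when it is deny-by-default: a request succeeds only if the caller's role explicitly grants the permission. Here is a minimal Python sketch. The role names, permission strings, and the `user` dict are hypothetical; the `user` dict is assumed to come from an already-verified token.

```python
from functools import wraps

# Hypothetical role -> permission map; anything not listed is denied.
ROLE_PERMISSIONS = {
    "admin": {"chat:read", "chat:write", "users:manage"},
    "agent": {"chat:read", "chat:write"},
    "viewer": {"chat:read"},
}


class Forbidden(Exception):
    """Raised when the caller's role does not grant the required permission."""


def requires(permission: str):
    def decorator(handler):
        @wraps(handler)
        def wrapper(user: dict, *args, **kwargs):
            # Deny by default: unknown or missing roles get an empty permission set.
            allowed = ROLE_PERMISSIONS.get(user.get("role"), set())
            if permission not in allowed:
                raise Forbidden(f"{user.get('id')} lacks {permission}")
            return handler(user, *args, **kwargs)
        return wrapper
    return decorator


@requires("users:manage")
def delete_account(user: dict, target_id: str) -> str:
    return f"deleted {target_id}"
```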

Security Standards Comparison Table

| Standard / Requirement | What It Proves | Required For |
|---|---|---|
| ISO 27001 | Security management system is in place | Enterprise trust |
| SOC 2 Type II | Security controls tested over time | B2B and SaaS apps |
| GDPR | Legal compliance for EU users | EU market |
| HIPAA | Protected health data compliance | Healthcare apps |
| PCI DSS | Secure payment handling | Subscription/payment apps |
| Penetration Testing | Real-world attack simulation | All serious apps |
| Secure API Design | Prevents data leaks & scraping | AI apps especially |

Red Flags: How to Spot Unsafe White-Label Providers

Warning Signs

Most unsafe white-label ChatGPT app providers don’t look unsafe at first. They look “fast,” “cheap,” and “ready to launch.”

Here are the biggest red flags you should never ignore:

No security documentation

If the provider cannot share security policies, audit reports, or architecture notes, that’s a serious warning.

Cheap pricing without explanation

Low pricing often means:

- weak infrastructure

- no audits

- no monitoring

- outdated code

Security is expensive. If the pricing is unbelievably low, security is usually missing.

No compliance certifications

A provider selling AI apps in 2026 without even basic compliance readiness is not enterprise-safe.

Outdated technology stack

Old frameworks, unsupported libraries, and outdated server configurations are common in risky providers.

Poor code quality

If the app feels buggy, slow, or inconsistent, it’s usually a sign of deeper issues in security and architecture.

No security updates policy

Security is not a one-time setup. If they cannot explain how updates and patches work, you are exposed long-term.

Lack of data backup systems

No backups means:

- one ransomware incident can destroy everything

- recovery time becomes unpredictable

- business continuity fails

No insurance coverage

Serious providers often have cybersecurity or professional liability insurance. If they have none, your risk increases.

Evaluation Checklist

Before choosing a white-label ChatGPT app provider, use this checklist.

Questions to ask providers

- Do you follow ISO 27001 or SOC 2 practices?

- How do you encrypt chat data at rest and in transit?

- How do you prevent unauthorized admin access?

- How often do you run penetration tests?

- Do you provide security patch updates regularly?

- What is your incident response process?

- Where is user data stored (region/country)?

Documents to request

- security policy overview

- compliance readiness statement (GDPR/CCPA)

- penetration test summary (even a sanitized one)

- infrastructure security diagram

- data retention and deletion policy

Testing procedures

Even if you’re not technical, insist on:

- a staging demo environment

- API documentation review

- admin panel security review

- authentication and role testing

Due diligence steps

- check the provider’s past projects

- ask for security case studies

- verify update and maintenance commitments

- confirm who owns the data legally

Best Practices for Secure White-Label ChatGPT App Implementation

Pre-Launch Security

A white-label ChatGPT app can be secure — but only if security is treated as a launch requirement, not a future upgrade.

Security audit process

Before launch, the provider should perform:

- application security review

- infrastructure security review

- database and storage review

- access control review

If a provider skips this, you are launching blind.

Code review requirements

A secure provider must review:

- authentication logic

- API permissions

- file upload handling (if included)

- admin panel security

- rate limiting and abuse controls
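When reviewing authentication logic, check two things: that tokens carry an expiry, and that signatures are verified in constant time. The simplified token format below is hypothetical and only for illustration. A well-maintained JWT or session library is usually preferable in production. Refresh-token rotation is not shown.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"load-from-secrets-manager"  # hypothetical; never hardcode in production
TOKEN_TTL = 15 * 60                     # 15-minute access tokens (illustrative value)


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _sign(body: str) -> str:
    return _b64(hmac.new(SECRET, body.encode(), hashlib.sha256).digest())


def issue_token(user_id: str, now: Optional[float] = None) -> str:
    now = time.time() if now is None else now
    body = _b64(json.dumps({"sub": user_id, "exp": int(now) + TOKEN_TTL}).encode())
    return f"{body}.{_sign(body)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user ID if the token is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        body, sig = token.split(".")
    except ValueError:
        return None  # malformed token
    if not hmac.compare_digest(_sign(body).encode(), sig.encode()):
        return None  # tampered or forged
    claims = json.loads(base64.urlsafe_b64decode(body + "=" * (-len(body) % 4)))
    if claims["exp"] < now:
        return None  # expired: the client must re-authenticate or refresh
    return claims["sub"]
```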

Infrastructure hardening

Infrastructure must include:

- firewall rules

- private database access (not public)

- secure cloud IAM roles

- DDoS protection

- encrypted backups

Compliance verification

Before launch, confirm:

- GDPR consent flows

- privacy policy readiness

- data deletion and export processes

- regional data storage requirements

Staff training programs

Even the best tech fails if your team is careless. Basic training should cover:

- phishing awareness

- admin account security

- secure data handling

Post-Launch Monitoring

Security is a continuous job. AI apps are attacked constantly, especially once they gain users.

Continuous security monitoring

Your app should have:

- login monitoring

- unusual API activity alerts

- suspicious traffic detection

- admin access logging
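Login monitoring can start as simply as counting failed attempts per account or IP address within a sliding time window. Here is a minimal Python sketch. The threshold and window are illustrative values. In practice, alerts would be sent on to your monitoring or SIEM tool.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags a key (account or IP) when failures within `window` seconds reach `threshold`."""

    def __init__(self, threshold: int = 5, window: float = 300.0):
        self.threshold = threshold
        self.window = window
        self.failures = defaultdict(deque)  # key -> timestamps of recent failures

    def record_failure(self, key: str, ts: float) -> bool:
        q = self.failures[key]
        q.append(ts)
        # Drop failures that have slid out of the window.
        while q and ts - q[0] > self.window:
            q.popleft()
        return len(q) >= self.threshold  # True means "raise an alert"


monitor = FailedLoginMonitor(threshold=3, window=60)
for t in (0, 10, 20):
    alert = monitor.record_failure("203.0.113.7", t)
print(alert)  # True: three failures within 60 seconds
```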

Regular updates and patches

A secure provider must commit to:

- monthly security updates

- emergency patching for critical issues

- dependency updates for libraries

Incident response planning

You need a plan for:

- containment

- investigation

- customer notification

- legal reporting

- recovery

User data management

Post-launch, you must manage:

- data retention policies

- user deletion requests

- chat history storage limits

- access permissions
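Chat history storage limits are typically enforced by a scheduled job that removes records older than the retention period. Here is a minimal Python sketch over in-memory records. The 90-day period is an assumption; use whatever period your privacy policy states. Against a database, the same idea becomes a `DELETE ... WHERE created_at < ?` query.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=90)  # illustrative; use the period stated in your privacy policy


def purge_expired(messages: list[dict], now: datetime) -> list[dict]:
    """Keep only messages created on or after the retention cutoff."""
    cutoff = now - RETENTION
    return [m for m in messages if m["created_at"] >= cutoff]


now = datetime(2026, 6, 1, tzinfo=timezone.utc)
history = [
    {"text": "old", "created_at": now - timedelta(days=120)},
    {"text": "recent", "created_at": now - timedelta(days=10)},
]
print([m["text"] for m in purge_expired(history, now)])  # ['recent']
```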

Backup and recovery systems

Your provider must support:

- daily encrypted backups

- disaster recovery process

- a recovery time objective (RTO): how quickly service must be restored

- a recovery point objective (RPO): how much recent data you can afford to lose

Security Implementation Timeline

Here’s a realistic security timeline for a white-label ChatGPT app:

- Week 1: Security architecture + compliance planning

- Week 2: Code review + authentication hardening

- Week 3: API security + infrastructure hardening

- Week 4: Pen testing + final fixes

- Launch: Monitoring + incident response readiness

- Ongoing: Monthly updates + quarterly security reviews

Legal & Compliance Considerations

Regulatory Requirements

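A white-label ChatGPT app is not just “software.” Because it collects and processes personal data, it falls under data protection law in most regions, and as the business running it, you carry the compliance obligations.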

That means you must plan compliance before launch.

Data protection laws by region

Key compliance laws you must consider in 2026 include:

- GDPR (European Union)

- UK GDPR (United Kingdom)

- CCPA/CPRA (California, USA)

- LGPD (Brazil)

- PDPA (Singapore, Thailand, and others)

- POPIA (South Africa)

- PIPEDA (Canada)

Even if you are not based in these regions, you may still be required to comply if your users live there.

Industry-specific regulations

Depending on your use case, your ChatGPT app may fall under:

- healthcare regulations (HIPAA)

- finance and fintech compliance

- education privacy laws

- children’s privacy (COPPA, GDPR-K)

User consent management

Your app must support:

- cookie and tracking consent (if applicable)

- clear data collection consent

- AI disclaimer and consent

- opt-out mechanisms

Privacy policy requirements

Your privacy policy must clearly explain:

- what data is collected

- why it is collected

- where it is stored

- how long it is stored

- how users can delete/export it

Terms of service essentials

Your ToS should include:

- AI output limitations

- user responsibility clauses

- prohibited content rules

- dispute and liability sections

Liability Protection

Security is not only about preventing breaches — it’s also about surviving them legally.

Insurance requirements

Businesses running AI apps should consider:

- cyber liability insurance

- professional liability insurance

- errors and omissions coverage

Legal disclaimers

AI apps must include disclaimers about:

- accuracy limitations

- non-medical / non-legal advice

- content generation risks

User agreements

Your onboarding must include:

- explicit acceptance of privacy policy

- consent for AI processing

- optional consent for analytics

Incident reporting protocols

A serious provider will have:

- breach reporting process

- timelines for notification

- internal escalation steps

- documentation readiness
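Notification timelines are easy to miss under pressure. Under GDPR Article 33, a controller must notify the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of a personal data breach. A small Python helper can make that deadline explicit in your incident runbook. Other regimes set their own timelines, which are not modeled here.

```python
from datetime import datetime, timedelta, timezone

# GDPR Art. 33: notify within 72 hours of becoming aware of the breach, where feasible.
GDPR_NOTIFY_WINDOW = timedelta(hours=72)


def gdpr_notification_deadline(became_aware: datetime) -> datetime:
    return became_aware + GDPR_NOTIFY_WINDOW


def hours_remaining(became_aware: datetime, now: datetime) -> float:
    return (gdpr_notification_deadline(became_aware) - now).total_seconds() / 3600


aware = datetime(2026, 3, 2, 9, 30, tzinfo=timezone.utc)
print(gdpr_notification_deadline(aware))  # 2026-03-05 09:30:00+00:00
```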

Regulatory compliance monitoring

Compliance is not “set and forget.” Laws change often, and AI regulation is expanding rapidly.

Compliance Checklist by Region

| Region | Key Laws | What You Must Ensure |
|---|---|---|
| European Union | GDPR | Consent, deletion, export, breach reporting, DPA with vendors |
| United Kingdom | UK GDPR | Same as GDPR + UK-specific reporting expectations |
| USA (California) | CCPA / CPRA | Data access, opt-out, deletion, disclosure of selling/sharing |
| Canada | PIPEDA | Transparency, consent, secure storage, breach reporting |
| Brazil | LGPD | Legal basis, user rights, retention control |
| Singapore | PDPA | Consent, purpose limitation, access controls |
| South Africa | POPIA | Data protection safeguards, breach notification |
| Global | Industry regulations | HIPAA, PCI DSS, COPPA, finance rules (depending on use case) |

Why Miracuves White-Label ChatGPT App is Your Safest Choice

If you’re building a white-label ChatGPT app in 2026, your biggest risk is not “AI performance.”

Your biggest risk is launching something that users don’t trust.

Miracuves is positioned as a security-first provider because the platform is designed with safety, compliance, and long-term risk reduction built in.

Miracuves Security Advantages

Enterprise-grade security architecture

Miracuves white-label ChatGPT app solutions are built with security controls that match modern enterprise expectations, not startup shortcuts.

Regular security audits and certifications

Miracuves focuses on structured security practices and audit-ready development, so your app can meet real compliance requirements.

GDPR/CCPA compliant by default

Instead of treating compliance as an “add-on,” Miracuves builds privacy-first features into the core system.

24/7 security monitoring

Continuous monitoring helps detect:

- suspicious login attempts

- unusual API usage

- abuse patterns

- potential scraping attacks

Encrypted data transmission

Miracuves ensures encrypted communication and secure handling of user chat data.

Secure payment processing

If your app includes subscriptions, Miracuves supports secure payment integration aligned with PCI DSS expectations.

Regular security updates

Security threats evolve constantly. Miracuves supports ongoing patching, dependency updates, and platform hardening.

Insurance coverage included

A major trust signal: Miracuves includes insurance coverage, reducing business risk and strengthening liability protection.

Final Thought

Don’t compromise on security. Miracuves white-label ChatGPT app solutions come with enterprise-grade security built-in. Our 600+ successful projects have maintained zero major security breaches. Get a free security assessment and see why businesses trust Miracuves for safe, compliant platforms.

A white-label ChatGPT app can absolutely be safe in 2026 — but only if security is treated as the foundation, not a feature.

If you want to launch fast without gambling with user trust, Miracuves is the security-first choice.

FAQs

1. How secure is white-label vs custom development?

White-label can be just as secure if the provider follows strong standards. Custom is only safer if built by a security-mature team.

2. What happens if there’s a security breach?

You must contain it, investigate, notify users/regulators (if required), and patch the issue. A good provider supports incident response.

3. Who is responsible for security updates?

Usually the provider handles core platform updates, while you handle app-level policies and admin access. Miracuves supports both.

4. How is user data protected in white-label apps?

Through encryption, access controls, secure APIs, and strict data storage policies.

5. What compliance certifications should I look for?

ISO 27001, SOC 2 Type II, GDPR readiness, and PCI DSS for payments. HIPAA if healthcare-related.

6. Can white-label apps meet enterprise security standards?

Yes, if the provider offers audits, monitoring, secure architecture, and compliance support.

7. How often should security audits be conducted?

At minimum: yearly. Ideally: quarterly reviews plus penetration testing after major updates.

8. What’s included in the Miracuves security package?

Encrypted data handling, compliance-ready architecture, monitoring, secure payments, regular updates, and risk-reduction support.

9. How should I handle security across different countries?

Use region-based compliance planning, local data storage rules where needed, and privacy policies tailored per region.

10. What insurance is needed for app security?

Cyber liability insurance is the most common. Many businesses also add professional liability coverage.
