You’ve heard the horror stories about data breaches, leaked user conversations, and AI apps quietly collecting more data than they should.

Now imagine this happening inside an Apple AI Realm-style app — where users may share voice, personal preferences, messages, media, and even private context. In 2026, white-label apps aren’t automatically unsafe, but they can become risky fast if security is treated like an “add-on.”

In this guide, I’ll give you an honest, security-first breakdown of what makes a white-label Apple AI Realm app safe, what makes it dangerous, and exactly how to assess a provider before you invest.

Understanding the White-Label Apple AI Realm App Security Landscape

What White-Label App Security Really Means

White-label app security refers to how securely the core app architecture is built, maintained, and updated by the provider — not just how it looks on the surface. For an Apple AI Realm-style app, this includes AI data handling, cloud infrastructure, APIs, and user privacy controls.

Common Security Myths vs Reality

Many believe white-label apps are “less secure by default.” In reality, risk comes from poor implementation, outdated code, and missing compliance — not the white-label model itself.

Why People Worry About White-Label AI Apps

Apple AI Realm-style apps often process sensitive data like voice inputs, behavioral context, and personal preferences. This makes users naturally cautious about how their data is stored and used.

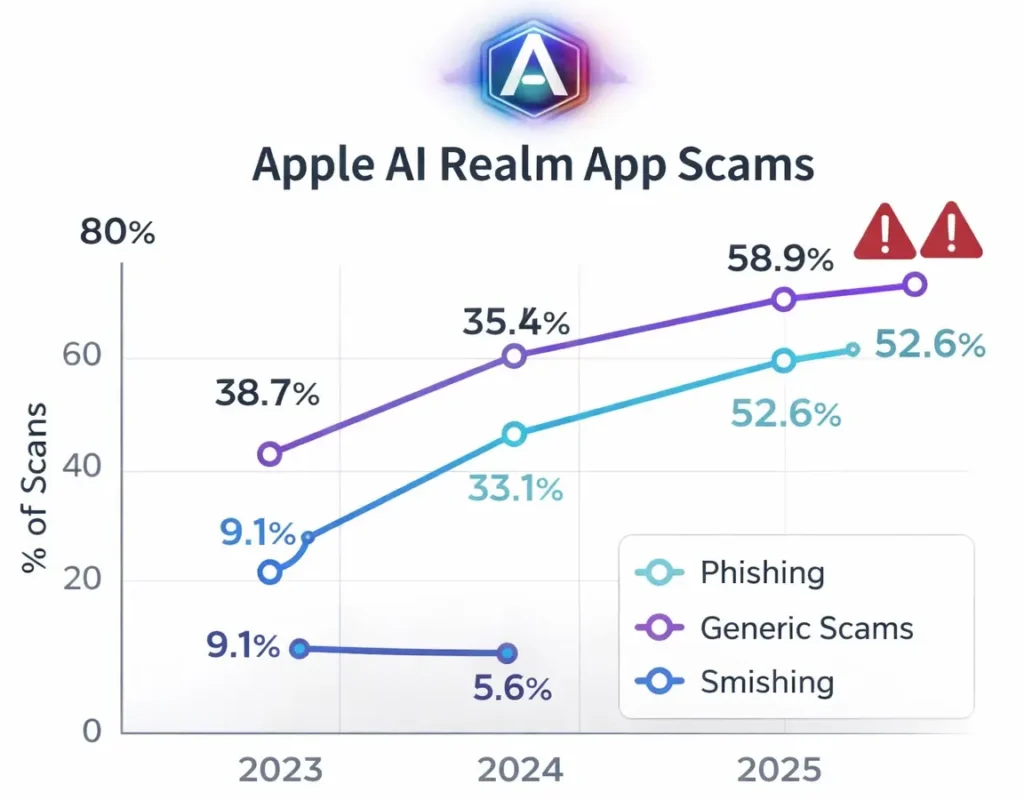

Current Threat Landscape for AI-Driven Apps

In 2026, AI-powered apps face increased risks from:

- API abuse and model extraction

- Unauthorized data access

- AI prompt injection attacks

- Cloud misconfiguration

Security Standards in 2026

Modern white-label AI apps are expected to adopt a zero-trust architecture, encrypt their AI data pipelines, and enforce strict access control policies.

Real-World Security Statistics

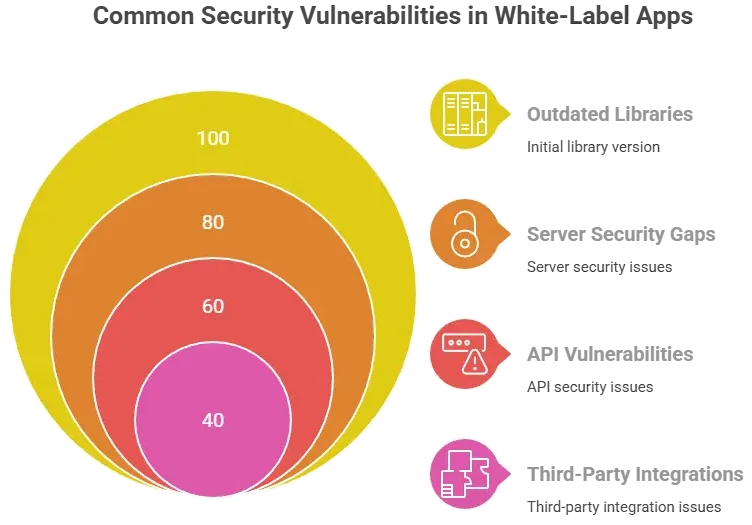

Recent industry reports show that over 60% of AI app breaches are caused by misconfigured APIs and poor access controls — not AI models themselves.

Key Security Risks & How to Identify Them

Data Protection & Privacy Risks

User Personal Information

Apple AI Realm-style apps often store names, preferences, chat history, and voice inputs. Weak encryption or improper access control can expose this data.

Payment Data Security

If the app includes subscriptions or in-app purchases, insecure payment handling can lead to PCI DSS violations and financial fraud.

Location & Context Tracking

AI context awareness increases risk when location or behavioral data is stored without strict minimization and consent controls.
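Data minimization can start at the point of collection. Below is a minimal sketch. It assumes the app only needs neighborhood-level location for context features. The function name and the two-decimal precision are illustrative choices, not a standard:

```python
from typing import Optional, Tuple


def minimize_location(
    lat: float,
    lon: float,
    has_location_consent: bool,
    decimals: int = 2,
) -> Optional[Tuple[float, float]]:
    """Return coarsened coordinates, or None when the user has not consented.

    Two decimal places keep roughly 1.1 km of north-south precision, which is
    often enough for context features such as local weather.
    """
    if not has_location_consent:
        return None  # store nothing rather than store precise data
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinates out of range")
    return (round(lat, decimals), round(lon, decimals))
```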

GDPR and CCPA Compliance

Missing consent logs, unclear data retention policies, or no data deletion mechanism are major compliance red flags.

Business-Level Risks

Legal Liability

Data breaches can trigger lawsuits, regulatory audits, and forced shutdowns.

Reputation Damage

AI apps lose trust quickly after a single privacy incident.

Financial Losses

Fines, downtime, and recovery costs often exceed development costs.

Regulatory Penalties

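Non-compliance with global data laws can lead to multi-million-dollar penalties. Under GDPR, for example, fines can reach €20 million or 4% of global annual turnover, whichever is higher.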

Risk Assessment Checklist

- Is all user and AI data encrypted at rest and in transit?

- Are APIs protected with OAuth and rate limits?

- Is AI input and output logged securely?

- Are compliance audits documented?

- Is incident response clearly defined?
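Secure logging starts with keeping personal data out of logs in the first place. Below is a minimal sketch. It assumes prompts may contain email addresses and phone numbers. The regexes and logger name are hypothetical and deliberately crude. They will also mask some date-like strings, so a vetted PII-detection tool is the safer choice in production:

```python
import logging
import re

# Hypothetical, deliberately broad patterns: they over-redact rather than leak.
_EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
_PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

logger = logging.getLogger("ai_audit")  # hypothetical logger name


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text reaches a log."""
    text = _EMAIL.sub("[EMAIL]", text)
    return _PHONE.sub("[PHONE]", text)


def log_exchange(user_id: str, prompt: str, response: str) -> None:
    """Record an AI exchange with personal data masked in both directions."""
    logger.info("user=%s prompt=%r response=%r", user_id, redact(prompt), redact(response))
```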

Security Standards Your White-Label Apple AI Realm App Must Meet

Essential Certifications

ISO 27001 Compliance

ISO 27001 certification shows that the provider runs a structured information security management system (ISMS) that has been audited by an independent certification body. It’s one of the strongest “trust signals” in white-label app security.

SOC 2 Type II

SOC 2 Type II validates that security controls are not only designed correctly, but also consistently followed over time.

GDPR Compliance

Required if you serve EU users. For an Apple AI Realm-style app, GDPR matters heavily because AI data often includes personal identifiers and behavioral context.

HIPAA (If Applicable)

If your app touches health-related conversations, wellness coaching, therapy-like prompts, or medical tracking, HIPAA may apply in the US.

PCI DSS for Payments

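If your app accepts card payments (subscriptions, purchases, upgrades), PCI DSS compliance is mandatory. Using a PCI DSS-compliant payment processor reduces your own compliance scope but does not eliminate it.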

Technical Security Requirements

Encryption in Transit and at Rest

At minimum:

- Encryption in transit (TLS 1.2+ / TLS 1.3)

- Encryption at rest (AES-256)

For AI apps, encryption must cover both chat data and AI context storage.
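On the client side, the TLS baseline can be enforced in code instead of being left to defaults. The sketch below uses Python's standard `ssl` module. The helper name is hypothetical. Encryption at rest with AES-256 is usually handled by the database, storage service, or a key management service, so it is not shown here:

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings matching a TLS 1.2+ baseline."""
    ctx = ssl.create_default_context()            # verifies certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL, TLS 1.0 and TLS 1.1
    return ctx
```

You can pass the context to `urllib.request.urlopen(url, context=ctx)`. Any backend call made with it will then refuse old protocol versions and unverified certificates.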

Secure Authentication (2FA / OAuth)

Secure Apple AI Realm-style apps must support:

- OAuth 2.0 / OpenID Connect

- Multi-factor authentication (2FA)

- Secure session management
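Time-based one-time passwords (TOTP, RFC 6238) are one common second factor. The sketch below is illustrative only, and its function names are hypothetical. It assumes the per-user secret is stored encrypted on the server. A well-reviewed library is preferable in production:

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: int, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1 (the RFC default)."""
    counter = for_time // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation from RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[int] = None,
                window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept codes from the current step, plus or minus `window` steps of clock drift."""
    now = int(time.time()) if now is None else now
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(totp(secret, t, step, digits), submitted):
            return True
    return False
```

The `window` parameter tolerates one 30-second step of clock drift. A real deployment should also rate-limit attempts and reject reuse of a code that has already been accepted.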

Regular Security Audits

Audits should happen at least quarterly, with documentation shared on request.

Penetration Testing

A provider must perform pen testing on:

- APIs

- Admin panels

- Cloud storage

- Authentication flows

SSL/TLS Certificates

Basic requirement, but also ensure:

- Auto-renewal

- No expired certificates

- HSTS enabled
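Expiry checks are easy to automate. Below is a minimal monitoring sketch. It assumes a scheduled job that runs against your own domains, and the function names are hypothetical. The default context refuses certificates that have already expired, so in that case the handshake itself fails with an error:

```python
import socket
import ssl
import time


def days_until_expiry(not_after: str, now: float) -> float:
    """Days left before a certificate's notAfter timestamp (negative if expired)."""
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Read the notAfter field from a live server certificate (needs network access)."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def needs_renewal_alert(host: str, threshold_days: int = 30) -> bool:
    """True when the certificate expires within `threshold_days`."""
    return days_until_expiry(fetch_not_after(host), time.time()) < threshold_days
```

HSTS is configured on the server side, usually as a response header such as `Strict-Transport-Security: max-age=31536000; includeSubDomains`.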

Secure API Design

For AI-driven apps, API security is critical:

- Token-based authentication

- Rate limiting

- Input validation

- Zero-trust internal access
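Rate limiting is often implemented as a token bucket per API token. Below is a minimal in-memory sketch with hypothetical class names. A deployment that spans multiple servers would keep the counters in a shared store instead:

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, then `refill_per_sec` requests per second."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(float(self.capacity),
                          self.tokens + (now - self.last) * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per API token, so one abusive client cannot starve the others."""

    def __init__(self, capacity: int, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_token: str) -> bool:
        bucket = self.buckets.get(api_token)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_sec, self.clock)
            self.buckets[api_token] = bucket
        return bucket.allow()
```

The injectable `clock` makes the limiter easy to test. In this sketch the per-token dictionary grows without bound, so production code would also expire idle buckets.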

Security Standards Comparison Table

| Standard / Control | Why It Matters for Apple AI Realm-Style Apps | Required Level in 2026 |
|---|---|---|
| ISO 27001 | Full security governance | Strongly recommended |
| SOC 2 Type II | Proves security is practiced, not promised | Expected for serious providers |
| GDPR | AI + personal data = high risk | Mandatory for EU users |
| CCPA/CPRA | Protects US user rights | Mandatory for California users |
| PCI DSS | Payment data protection | Mandatory if payments exist |
| Pen Testing | Finds real exploitable weaknesses | Required quarterly |
| Encryption | Prevents data leaks from storage and transit | Mandatory baseline |

Red Flags: How to Spot Unsafe White-Label Providers

Warning Signs You Should Take Seriously

No Security Documentation

If a provider cannot share even basic security policies, architecture notes, or audit summaries, it usually means security is not a real priority.

Cheap Pricing Without Explanation

Low-cost white-label Apple AI Realm-style apps often cut corners in:

- encryption

- infrastructure

- monitoring

- updates

This is where breaches are born.

No Compliance Certifications

A provider may say “we follow best practices,” but if they have no ISO 27001, SOC 2, or compliance-ready documentation, you are taking a blind risk.

Outdated Technology Stack

Old frameworks, outdated libraries, and unpatched servers are common entry points for attackers.

Poor Code Quality

If the app is slow, buggy, or unstable, that often signals deeper engineering issues — including security.

No Security Updates Policy

Security is not a one-time job. If the provider doesn’t have a patching schedule, you will eventually run a vulnerable system.

No Backup and Recovery Systems

A secure app must survive:

- ransomware

- accidental deletion

- system failure

No Insurance Coverage

Serious providers usually carry cyber liability coverage. If they don’t, it’s often because they’re not built for enterprise-level risk.

Evaluation Checklist: What to Ask Providers

Questions to Ask

- Do you provide SOC 2 Type II or ISO 27001 proof?

- How often do you run penetration testing?

- What is your incident response process?

- How do you store and encrypt AI conversation data?

- Do you support GDPR deletion requests and consent logs?

Documents to Request

- Security overview / architecture

- Data processing agreement (DPA)

- Pen test summary reports

- Compliance statement (GDPR/CCPA/PCI)

- SLA covering uptime and incident handling

Testing Procedures to Confirm

- API vulnerability testing

- Authentication security review

- Admin panel access control testing

- Cloud storage permission review
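Part of a cloud storage permission review can be scripted. The sketch below assumes AWS S3-style bucket policy JSON, and its function names are hypothetical. Managed controls such as S3 Block Public Access cover more cases than this check does:

```python
import json
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """Policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def public_allow_statements(policy_json: str) -> List[Dict[str, Any]]:
    """Return Allow statements that grant access to any principal ("*").

    Statements with a Condition block may still be legitimately restricted,
    so treat the output as a review list, not a verdict.
    """
    policy = json.loads(policy_json)
    flagged = []
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        if principal == "*":
            flagged.append(stmt)
        elif isinstance(principal, dict) and "*" in _as_list(principal.get("AWS")):
            flagged.append(stmt)
    return flagged
```

For example, a statement with `"Principal": "*"` and `"Action": "s3:GetObject"` would be flagged. A statement scoped to a specific IAM role would not.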

Due Diligence Steps

- Run a third-party security audit before launch

- Validate infrastructure setup (not just UI)

- Confirm update policy in writing

Best Practices for Secure White-Label Apple AI Realm App Implementation

Pre-Launch Security Practices

Security Audit Process

Before launch, a full security audit should cover infrastructure, APIs, AI data flows, and admin access. This helps catch critical issues early.

Code Review Requirements

Independent code reviews ensure there are no hardcoded keys, insecure logic, or outdated dependencies hidden in the app.
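A lightweight automated pass can catch obvious hardcoded keys before a human reviewer looks at the code. The sketch below uses a few illustrative rules, and the rule names and file suffixes are assumptions. It complements, rather than replaces, dedicated secret-scanning tools and independent review:

```python
import re
from pathlib import Path
from typing import Iterator, Tuple

# Illustrative rules only; dedicated secret scanners ship much larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_assignment": re.compile(
        r"""(?i)(api_key|secret|password|token)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}

SOURCE_SUFFIXES = (".py", ".swift", ".kt", ".js", ".ts", ".json", ".yaml", ".yml")


def scan_text(text: str) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, rule_name) for each line that looks like it holds a secret."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                yield lineno, rule


def scan_tree(root: str) -> Iterator[Tuple[str, int, str]]:
    """Walk a source tree and report suspected secrets as (path, line, rule)."""
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            for lineno, rule in scan_text(path.read_text(errors="ignore")):
                yield str(path), lineno, rule
```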

Infrastructure Hardening

Secure cloud setup should include:

- Private networks

- Firewall rules

- Role-based access control

- Isolated AI processing environments
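Role-based access control works best when it denies access by default. The sketch below illustrates the idea with a hypothetical role and permission map for the admin side of an AI app:

```python
from enum import Enum
from typing import Dict, FrozenSet


class Permission(str, Enum):
    READ_CONVERSATIONS = "read_conversations"
    EXPORT_USER_DATA = "export_user_data"
    MANAGE_MODELS = "manage_models"
    VIEW_METRICS = "view_metrics"


# Hypothetical role map: each role gets only what its job requires.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "support_agent": frozenset({Permission.VIEW_METRICS}),
    "privacy_officer": frozenset({Permission.READ_CONVERSATIONS, Permission.EXPORT_USER_DATA}),
    "ml_engineer": frozenset({Permission.MANAGE_MODELS, Permission.VIEW_METRICS}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```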

Compliance Verification

Confirm GDPR, CCPA, and payment compliance before onboarding users. Fixing compliance issues after launch is costly and risky.

Staff Training Programs

Even the best systems fail due to human error. Teams should be trained on secure access, data handling, and incident reporting.

Post-Launch Monitoring & Protection

Continuous Security Monitoring

Real-time monitoring helps detect:

- Unauthorized access

- API abuse

- AI prompt injection attempts
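Prompt-injection monitoring often starts with simple pattern matching on incoming prompts. The phrases below are illustrative assumptions. Attackers can paraphrase around any fixed list, so a match should feed alerts and review rather than serve as the only defense:

```python
import re
from dataclasses import dataclass
from typing import List

# Illustrative phrases seen in common injection attempts (not an exhaustive list).
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"(reveal|print|show) (me )?(your|the) system prompt", re.I),
    re.compile(r"you are now (in )?developer mode", re.I),
    re.compile(r"disregard (your|the) (rules|guidelines|policies)", re.I),
]


@dataclass
class InjectionSignal:
    flagged: bool
    matched: List[str]  # which patterns fired, for the monitoring dashboard


def check_prompt(text: str) -> InjectionSignal:
    """Flag prompts that match known injection phrasing."""
    matched = [p.pattern for p in INJECTION_PATTERNS if p.search(text)]
    return InjectionSignal(flagged=bool(matched), matched=matched)
```

Logging each signal with a pseudonymous user ID lets the monitoring system alert on spikes from a single account.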

Regular Updates and Patches

Security updates should be released on a fixed schedule, not only when problems appear.

Incident Response Planning

A clear plan must exist for:

- breach detection

- user notification

- regulatory reporting

- damage containment

User Data Management

AI conversation logs and context data should follow strict retention and deletion rules.
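Retention and deletion rules are easier to audit when they live in code next to the data. The sketch below uses hypothetical record types and retention periods. The real numbers belong in your privacy policy:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Iterable, List

# Hypothetical retention periods per record kind.
RETENTION: Dict[str, timedelta] = {
    "conversation_log": timedelta(days=90),
    "ai_context": timedelta(days=30),
    "security_audit_log": timedelta(days=365),
}


@dataclass
class Record:
    record_id: str
    user_id: str
    kind: str
    created_at: datetime


def expired_records(records: Iterable[Record], now: datetime) -> List[Record]:
    """Records older than their retention period, ready for scheduled deletion."""
    due = []
    for r in records:
        if r.kind not in RETENTION:
            # Fail loudly so a new data type is never kept forever by accident.
            raise ValueError(f"no retention rule for {r.kind!r}")
        if now - r.created_at > RETENTION[r.kind]:
            due.append(r)
    return due


def erasure_targets(records: Iterable[Record], user_id: str) -> List[Record]:
    """All records belonging to a user who has requested deletion."""
    return [r for r in records if r.user_id == user_id]
```

Some records, such as security audit logs, may be subject to legal retention obligations, so erasure requests also need a documented exception path.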

Backup and Recovery Systems

Encrypted backups with tested recovery processes protect against data loss and ransomware.
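A backup only counts as tested once a restore from it has been verified. The sketch below assumes you record a SHA-256 digest when each backup is taken. `restore_fn` is a hypothetical hook that wraps your real download, decrypt, and unpack steps:

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(expected_sha256: str, restore_fn: Callable[[Path], Path]) -> bool:
    """Restore into a throwaway directory and compare against the recorded digest.

    `restore_fn` receives an empty directory, runs the restore procedure into it,
    and returns the path of the restored file.
    """
    with tempfile.TemporaryDirectory() as scratch:
        restored = restore_fn(Path(scratch))
        return sha256_of(restored) == expected_sha256
```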

Security Implementation Timeline

| Phase | Security Focus |
|---|---|
| Planning | Risk assessment & compliance mapping |
| Development | Secure coding & access control |
| Pre-Launch | Audit, pen testing, compliance checks |
| Launch | Monitoring & logging |
| Post-Launch | Updates, audits, incident drills |

Legal & Compliance Considerations

Regulatory Requirements

Data Protection Laws by Region

A white-label Apple AI Realm-style app may need to comply with:

- GDPR (EU)

- UK GDPR (United Kingdom)

- CCPA/CPRA (California)

- PIPEDA (Canada)

- DPDP Act (India)

- LGPD (Brazil)

Industry-Specific Regulations

If your app touches sensitive areas like health, finance, or children:

- HIPAA (US health data)

- PCI DSS (payments)

- COPPA (children’s privacy in the US)

User Consent Management

AI apps should obtain clear, specific consent for:

- data collection

- personalization

- analytics tracking

- location/context features
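Consent is easier to prove, and to withdraw, when every decision is stored as an append-only event. The sketch below uses hypothetical purpose names that mirror the consent list for this section. If no record exists, the user has not consented:

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical purpose keys, one per consent item in the privacy UI.
PURPOSES = {"data_collection", "personalization", "analytics", "location_context"}


@dataclass(frozen=True)
class ConsentEvent:
    user_id: str
    purpose: str
    granted: bool
    policy_version: str  # which privacy-policy text the user saw
    at: datetime


@dataclass
class ConsentLog:
    """Append-only: a withdrawal is a new event, never an edit of an old one."""
    events: List[ConsentEvent] = field(default_factory=list)

    def record(self, user_id: str, purpose: str, granted: bool,
               policy_version: str, at: Optional[datetime] = None) -> None:
        if purpose not in PURPOSES:
            raise ValueError(f"unknown consent purpose: {purpose!r}")
        when = at if at is not None else datetime.now(timezone.utc)
        self.events.append(ConsentEvent(user_id, purpose, granted, policy_version, when))

    def has_consent(self, user_id: str, purpose: str) -> bool:
        """The most recent decision wins; no decision at all means no consent."""
        latest: Optional[ConsentEvent] = None
        for event in self.events:
            if event.user_id == user_id and event.purpose == purpose:
                if latest is None or event.at >= latest.at:
                    latest = event
        return latest is not None and latest.granted
```

Under GDPR, withdrawing consent must be as easy as giving it. In this model, a withdrawal is just one more `record(..., granted=False, ...)` call.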

Privacy Policy Requirements

Your privacy policy must clearly explain:

- what data is collected

- why it’s collected

- how long it’s stored

- how users can delete it

Terms of Service Essentials

Terms should cover:

- acceptable use

- AI limitations and disclaimers

- liability boundaries

- dispute resolution

Liability Protection

Insurance Requirements

For AI-driven apps, cyber liability insurance is increasingly expected in 2026.

Legal Disclaimers

You must clarify that AI outputs are not guaranteed to be correct and should not be treated as professional advice.

User Agreements

Strong agreements protect you if users misuse AI features or upload sensitive information.

Incident Reporting Protocols

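Many laws require you to notify the following within strict timelines after a breach:

- regulators (under GDPR, the supervisory authority within 72 hours of becoming aware of the breach, where feasible)

- affected users (under GDPR, without undue delay when the breach poses a high risk to them)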

Regulatory Compliance Monitoring

Compliance is ongoing. A secure provider must support continuous monitoring, not just “launch-ready compliance.”

Compliance Checklist by Region

| Region | Key Laws | What You Must Have |
|---|---|---|
| EU | GDPR | Consent logs, deletion rights, DPA |
| UK | UK GDPR | Similar to GDPR + UK reporting |
| US (CA) | CPRA | Opt-out, deletion, data disclosure |
| Canada | PIPEDA | Transparency + user control |
| India | DPDP Act | Consent + breach reporting |
| Brazil | LGPD | Legal basis + data minimization |

Why Miracuves White-Label Apple AI Realm App is Your Safest Choice

Miracuves Security-First Approach

Miracuves builds white-label apps with security embedded into the architecture, not added later. For Apple AI Realm-style platforms, this means AI data protection, privacy compliance, and infrastructure hardening are part of the core system.

Enterprise-Grade Security Architecture

Miracuves uses layered security models with strict access controls, isolated environments, and encrypted AI data pipelines.

Compliance Ready by Default

Every Miracuves white-label Apple AI Realm app is built to support:

- GDPR and CCPA requirements

- Secure consent management

- Data deletion and retention controls

24/7 Security Monitoring

Continuous monitoring helps identify suspicious behavior, API abuse, and unauthorized access in real time.

Encrypted Data Transmission

All user data, AI conversations, and contextual inputs are encrypted both in transit and at rest.

Secure Payment Processing

Miracuves integrates PCI DSS-compliant payment systems for subscriptions and in-app purchases.

Regular Security Updates

Security patches and dependency updates are applied continuously to reduce exposure to emerging threats.

Insurance Readiness

Miracuves-supported platforms include cyber risk mitigation planning and insurance readiness.

Final Thought

Don’t compromise on security. Miracuves white-label Apple AI Realm app solutions come with enterprise-grade security built in. With 600+ successful projects and zero major security breaches, Miracuves helps businesses launch safe, compliant AI platforms. Get a free security assessment and see why security-focused teams trust Miracuves.

White-label Apple AI Realm apps can be safe in 2026 — but only when security, compliance, and accountability are treated as core business priorities. Choosing the right provider is the difference between building user trust and managing constant risk.

FAQs

1. How secure is white-label vs custom development?

White-label can be equally secure if the provider holds a SOC 2 Type II report and ISO 27001 certification and runs regular penetration testing. Custom development is only safer if it is built by a strong security team.

2. What happens if there’s a security breach?

You may face user trust loss, legal reporting requirements, and financial penalties. A secure provider should have an incident response plan ready.

3. Who is responsible for security updates?

In most cases, the provider handles core app updates, while you handle business-side policies. Miracuves supports ongoing security patching as part of the package.

4. How is user data protected in white-label apps?

Through encryption, strict access controls, secure APIs, and privacy-driven data retention rules.

5. What compliance certifications should I look for?

SOC 2 Type II, ISO 27001, GDPR readiness, and PCI DSS if payments exist.

6. Can white-label apps meet enterprise security standards?

Yes, if they are built with enterprise architecture, auditing, monitoring, and compliance support.

7. How often should security audits be conducted?

At minimum quarterly, plus annual full audits and regular vulnerability scanning.

8. What’s included in Miracuves security package?

Secure architecture, encryption, compliance support, monitoring readiness, regular updates, and security-first infrastructure planning.

9. How to handle security in different countries?

Use region-based compliance mapping, strong consent systems, and flexible data retention and deletion controls.

10. What insurance is needed for app security?

Cyber liability insurance is recommended, plus vendor coverage and breach response support depending on your region.
