Imagine you’re making a promo video, a short film scene, or a social ad—and you need a cinematic shot that would normally require a camera crew, actors, locations, and a day of production. Instead, you type a prompt, generate a video clip, then edit it like a normal timeline project. That “from idea to footage” shortcut is exactly what Runway ML is built for.

Runway ML is an AI-powered creative platform focused on video generation and video editing. It’s known for its generative video models (like Gen-3 Alpha and newer generations such as Gen-4 and Gen-4.5) and a toolkit that helps creators generate clips from text or images, refine scenes, and stitch outputs into real projects.

Runway’s big impact is making high-end visual creation more accessible: solo creators, marketing teams, and studios can prototype scenes, create variations, and iterate fast—without traditional production costs. Newer releases have emphasized better realism, prompt adherence, and consistency across scenes and characters.

By the end of this guide, you’ll understand what Runway ML is, how it works step by step, how it makes money, the features behind its popularity, the underlying tech (in simple terms), and why many founders want to build Runway-like AI video platforms—and how Miracuves can help you launch one quickly.

What Is Runway ML? The Simple Explanation

Runway ML is an AI-powered video creation and editing platform that helps creators generate video clips, transform footage, and edit content using artificial intelligence. In simple terms, it lets you create and modify videos using text prompts, images, and AI tools—without needing complex production setups.

The Core Problem Runway ML Solves

Traditional video production is expensive, time-consuming, and resource-heavy. Runway ML reduces these barriers by:

- Turning text or images into video clips

- Automating complex visual effects and edits

- Reducing dependency on large crews and equipment

- Speeding up experimentation and iteration

It makes video creation faster, cheaper, and more accessible.

Target Users and Use Cases

Runway ML is commonly used by:

- Content creators and YouTubers

- Filmmakers and video artists

- Marketing and social media teams

- Designers creating motion content

- Agencies prototyping video concepts

Typical use cases include AI-generated scenes, background removal, video style transfer, short-form content, ads, and experimental filmmaking.

Current Market Position

Runway ML is widely regarded as one of the leading platforms in AI video generation. It sits between experimental AI research tools and practical creator software, offering both cutting-edge models and usable editing workflows.

Why It Became Successful

Runway ML became popular because it focused on real creative output, not just demos. By combining AI generation with timeline-based editing, it lets creators actually finish projects instead of stopping at experiments.

How Runway ML Works — Step-by-Step Breakdown

For Creators (Filmmakers, Marketers, Designers)

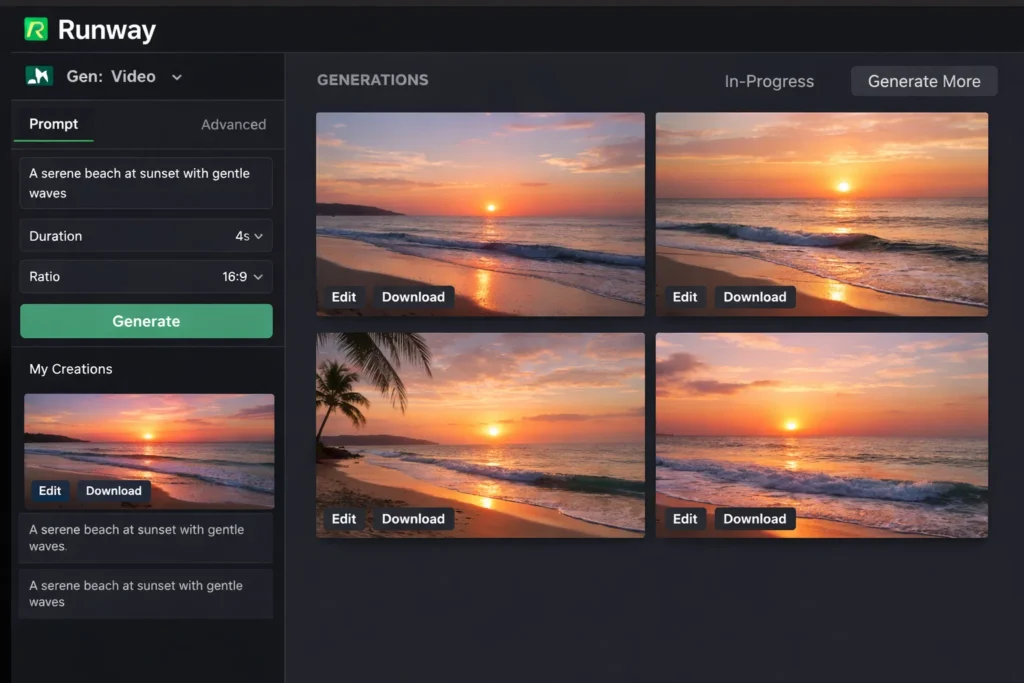

Getting started

Users begin inside Runway’s web-based studio. Instead of opening multiple tools, everything—generation, editing, and exporting—happens in one place. You can start from text, an image, or existing video footage.

Creating video with text or images

Runway allows creators to:

- Type a text prompt to generate a short video clip

- Upload an image and animate it into motion

- Extend or reimagine an existing scene using AI

The AI interprets movement, lighting, camera motion, and scene continuity to generate usable footage.

Refining and iterating

After a clip is generated, creators can:

- Regenerate variations of the same idea

- Adjust prompts for better motion or realism

- Extend clips to make scenes longer

- Combine multiple clips into a sequence

This makes it easy to explore creative directions without reshooting.

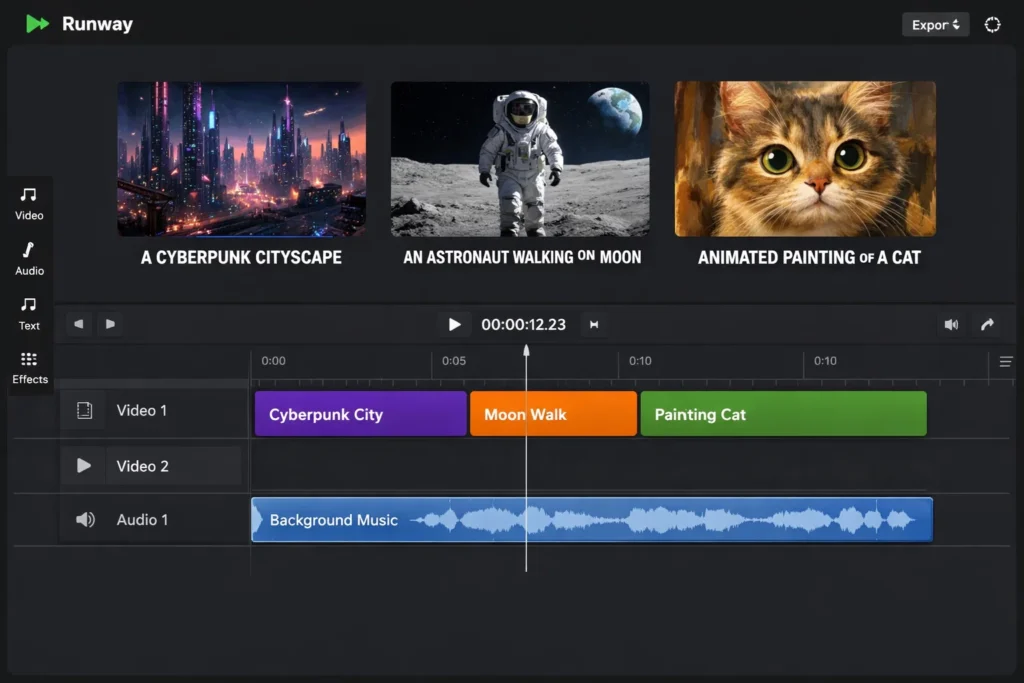

Editing inside Runway

Runway includes built-in editing tools such as:

- Timeline-based video editing

- Background removal and object isolation

- Style and color adjustments

- Clip trimming and sequencing

Generated clips behave like normal video files that can be edited and arranged.

Typical creator workflow

Idea or script → text/image prompt → AI-generated clip → refinement → timeline editing → export final video.

For Teams and Agencies

Fast prototyping and iterations

Teams use Runway to quickly create multiple versions of ads, social clips, or scenes, test creative directions, and finalize the best option.

Reduced production overhead

Because scenes can be generated or modified digitally, teams save time on locations, reshoots, and post-production fixes.

Technical Overview (Simple)

Runway ML combines:

- Video generation models (text-to-video, image-to-video)

- Motion and scene understanding systems

- Editing and compositing tools

- Cloud-based rendering and processing

The platform is designed to take creators from idea → footage → finished video without leaving the ecosystem.


Runway ML’s Business Model Explained

How Runway ML Makes Money

Runway ML operates on a subscription-based SaaS model designed for creators, teams, and studios. Instead of ads, revenue is tied directly to usage of its AI video tools and compute-intensive generation features.

Primary revenue streams include:

- Subscription plans: Monthly plans that unlock access to AI video generation, editing tools, and higher usage limits

- Usage-based credits: Advanced video generation (text-to-video, image-to-video) consumes credits tied to compute usage

- Team plans: Collaboration features, shared workspaces, and higher limits for agencies and studios

- Enterprise offerings: Custom plans for larger organizations with higher throughput and support needs

This model scales naturally with how much video creators produce.

Pricing Structure

Runway ML pricing typically depends on:

- Subscription tier (individual, creator, team)

- Monthly credit allocation for AI video generation

- Resolution, duration, and quality of generated clips

- Priority access to newer or more advanced models

Lower tiers support experimentation, while higher tiers are built for production workloads.

Fee Breakdown

- Monthly or annual subscription fee

- Credits consumed per AI-generated or extended clip

- No advertising or commission-based pricing

- Higher tiers unlock faster processing and more generation capacity

This keeps costs predictable while aligning price with creative output.
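As a rough illustration of how credit-based metering could work, the sketch below estimates the credits a clip would consume from its duration and resolution. The per-second rates and the `estimate_credits` and `clips_affordable` helpers are hypothetical, chosen only for the example; they are not Runway's actual pricing or API.

```python
import math

# Hypothetical credits charged per second of output, keyed by resolution.
# These numbers are illustrative only, not Runway's real rates.
CREDITS_PER_SECOND = {"720p": 5, "1080p": 10, "4k": 25}


def estimate_credits(duration_seconds: float, resolution: str) -> int:
    """Return the credits a single generated clip would consume."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    if resolution not in CREDITS_PER_SECOND:
        raise ValueError(f"unknown resolution: {resolution}")
    # Round up so a partial second is billed as a full second.
    return math.ceil(duration_seconds) * CREDITS_PER_SECOND[resolution]


def clips_affordable(monthly_credits: int, duration_seconds: float, resolution: str) -> int:
    """Return how many clips of this size fit in a monthly credit allocation."""
    return monthly_credits // estimate_credits(duration_seconds, resolution)
```

Under these assumed rates, a 10-second 1080p clip would cost 100 credits, so a 625-credit monthly allocation would cover six such clips. Tying the rate to resolution and duration is one simple way to keep price aligned with compute usage.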

Market Size and Demand

Demand for Runway-style platforms is driven by:

- Explosion of short-form video across social platforms

- Marketing teams needing rapid video variations

- Creators seeking cinematic visuals without production crews

- Agencies under pressure to deliver more content, faster

- Growing acceptance of AI-assisted filmmaking

AI video generation is becoming a core capability, not a novelty.

Profitability Insights

Runway ML improves profitability by:

- Encouraging recurring subscriptions

- Monetizing compute-heavy features through credits

- Retaining users with integrated creation + editing workflows

- Constantly releasing improved models that drive upgrades

Revenue Model Breakdown

| Revenue Stream | Description | Who Pays | Nature |
|---|---|---|---|
| Subscriptions | Access to tools & limits | Creators/Teams | Recurring |
| AI Credits | Video generation usage | Power users | Usage-based |
| Team Plans | Shared workspaces | Agencies/Studios | Tiered |
| Enterprise Deals | High-volume usage | Businesses | Contract |

Key Features That Make Runway ML Successful

1) Text-to-video generation

Runway lets creators generate short video clips directly from text prompts. You describe a scene, mood, or action, and the AI produces moving footage—dramatically reducing the time from idea to usable video.

2) Image-to-video animation

Creators can upload a still image and turn it into motion. This is powerful for bringing illustrations, product shots, or concept art to life without complex animation work.

3) Video extension and scene continuation

Runway can extend existing clips, helping creators lengthen shots or continue scenes without reshooting. This is especially useful for ads, transitions, and storytelling continuity.

4) Built-in timeline editor

Unlike many AI video tools, Runway includes a timeline-based editor. Generated clips behave like normal video files—trim, sequence, and assemble them into complete projects inside the same platform.

5) Background removal and object isolation

Runway automates tasks like background removal and subject isolation, saving hours of manual rotoscoping and compositing.

6) Multiple variations per prompt

For any prompt, Runway generates variations so creators can explore different camera movements, styles, and moods before choosing the best fit.

7) Fast iteration for creative teams

The generate → review → refine loop is quick, making it ideal for marketing teams and agencies producing many versions of the same concept.

8) Creator-friendly interface

The UI is designed for creatives, not engineers. Prompts, previews, editing, and exports are all accessible without deep technical knowledge.

9) Support for experimental filmmaking

Runway is popular with filmmakers and video artists who use AI to prototype scenes, test visual ideas, or create experimental content.

10) Constant model upgrades

Runway frequently releases improved video models with better motion, realism, and prompt adherence—keeping the platform competitive and driving user retention.

The Technology Behind Runway ML

Tech stack overview (simplified)

Runway ML is built around generative video models combined with practical video-editing systems. Instead of separating AI generation and post-production, Runway connects both into a single workflow:

- Video generation models (text-to-video, image-to-video)

- Motion understanding and temporal coherence systems

- Video editing and compositing tools

- Cloud-based rendering and compute infrastructure

This is why creators can go from prompt to finished video without switching platforms.

How text becomes video

When a user enters a prompt, Runway:

- Interprets scene elements (subject, environment, action)

- Predicts motion, camera movement, and transitions

- Generates a sequence of frames that flow smoothly

- Assembles those frames into a short video clip

Unlike images, video requires consistency across time. Runway’s models are designed to maintain temporal continuity, so objects don’t randomly change shape or position frame to frame.
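To make "consistency across time" a little more concrete, here is a toy metric that scores how much a clip changes from one frame to the next. It is only a sketch: frames are assumed to be flattened lists of grayscale values, and `temporal_flicker` is a hypothetical helper, not something Runway exposes. Production systems rely on learned models rather than raw pixel differences.

```python
from typing import List, Sequence

Frame = Sequence[float]  # a flattened grayscale frame, values in [0, 1]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute pixel difference between two same-sized frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def temporal_flicker(frames: List[Frame]) -> float:
    """Average difference between consecutive frames; lower means smoother."""
    if len(frames) < 2:
        return 0.0
    diffs = [frame_difference(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    return sum(diffs) / len(diffs)


smooth = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]  # gradual change between frames
jumpy = [[0.0, 0.0], [1.0, 1.0], [0.0, 0.0]]   # abrupt change on every frame
```

Calling `temporal_flicker(smooth)` gives a much lower score than `temporal_flicker(jumpy)`, which matches the intuition that objects should not jump around between frames.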

Image-to-video and scene animation

For image-based generation, Runway:

- Uses the uploaded image as a visual anchor

- Adds realistic motion, depth, and camera movement

- Maintains the original style and composition

- Produces animated footage that feels intentional

This makes static visuals usable in motion-first platforms.

Clip extension and continuity

Extending a clip means the AI must understand what came before. Runway’s system:

- Analyzes the final frames of a clip

- Predicts plausible next motion and lighting

- Generates continuation frames that feel natural

- Preserves the scene’s visual identity

This is critical for storytelling and ad creation.
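The first step above, analyzing the final frames, can be sketched as selecting a trailing window of the clip to condition the continuation on. Everything here is an assumption made for illustration: the `ExtensionRequest` shape, the `build_extension_request` helper, the 24 fps default, and the eight-frame context window are not taken from Runway.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ExtensionRequest:
    context_frames: List[str]  # trailing frames the model is conditioned on
    new_frame_count: int       # how many continuation frames to generate


def build_extension_request(
    frames: List[str], extra_seconds: float, fps: int = 24, context_size: int = 8
) -> ExtensionRequest:
    """Pick the clip's trailing frames as context for a continuation."""
    if not frames:
        raise ValueError("cannot extend an empty clip")
    if extra_seconds <= 0:
        raise ValueError("extra_seconds must be positive")
    # Slicing returns the whole clip when it is shorter than context_size.
    context = frames[-context_size:]
    return ExtensionRequest(context, round(extra_seconds * fps))
```

For a 48-frame clip extended by two seconds, this would pass the last eight frames as context and ask for 48 new frames. A larger context window gives the model more motion history at the cost of more compute per request.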

Editing and compositing layer

On top of generation, Runway includes tools for:

- Timeline-based editing

- Trimming and sequencing clips

- Background removal and object isolation

- Combining AI-generated and uploaded footage

This layer turns raw AI output into usable video projects.
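A minimal data model for trimming and sequencing might look like the following. The `Clip` and `Timeline` classes are hypothetical and only show the idea that generated and uploaded clips can share one representation on a timeline.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Clip:
    name: str
    duration: float          # full length of the source clip, in seconds
    trim_start: float = 0.0  # seconds removed from the beginning
    trim_end: float = 0.0    # seconds removed from the end

    @property
    def length(self) -> float:
        """Playable length after trimming."""
        remaining = self.duration - self.trim_start - self.trim_end
        if remaining <= 0:
            raise ValueError(f"clip {self.name!r} is trimmed to nothing")
        return remaining


@dataclass
class Timeline:
    clips: List[Clip] = field(default_factory=list)

    def layout(self) -> List[Tuple[str, float, float]]:
        """Return (name, start, end) for each clip placed back to back."""
        cursor, placed = 0.0, []
        for clip in self.clips:
            placed.append((clip.name, cursor, cursor + clip.length))
            cursor += clip.length
        return placed


timeline = Timeline([
    Clip("ai_generated_intro", duration=5.0, trim_end=1.0),
    Clip("uploaded_product_shot", duration=8.0, trim_start=2.0),
])
```

Here `timeline.layout()` places the trimmed intro at 0 to 4 seconds and the product shot at 4 to 10 seconds. Keeping trims as metadata rather than re-encoding the source is a common non-destructive editing choice.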

Performance and scalability

Video generation is compute-heavy, so Runway relies on scalable cloud infrastructure to:

- Handle large volumes of generation requests

- Offer faster processing for higher-tier users

- Balance quality, speed, and cost

- Support growing creator demand
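One common way to offer faster processing to higher tiers is a priority queue in front of the GPU workers. The sketch below uses Python's standard `heapq` module; the tier names, their priorities, and the `GenerationQueue` class are assumptions for illustration, not a description of Runway's infrastructure.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical plan tiers; a lower number is served first.
TIER_PRIORITY = {"enterprise": 0, "pro": 1, "free": 2}


class GenerationQueue:
    """Serve higher-tier jobs first, keeping arrival order within a tier."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, job_id: str, tier: str) -> None:
        heapq.heappush(self._heap, (TIER_PRIORITY[tier], next(self._counter), job_id))

    def next_job(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = GenerationQueue()
queue.submit("job-a", "free")
queue.submit("job-b", "pro")
queue.submit("job-c", "free")
queue.submit("job-d", "enterprise")
order = [queue.next_job() for _ in range(len(queue))]
```

With these submissions, the enterprise job is served first, then the pro job, then the two free jobs in arrival order. A strict priority scheme like this can starve lower tiers under heavy load, so a real system would likely add aging or per-tier capacity limits.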

Data handling and safeguards

Runway applies usage policies and content controls to reduce harmful outputs while keeping creative freedom intact—important for a broad creator audience.

Why this technology matters for business

Runway’s technology shows how AI video generation becomes valuable only when paired with editing and workflow tools. For businesses, this means faster content production, lower costs, and the ability to iterate visually without traditional production bottlenecks.

Runway ML’s Impact & Market Opportunity

Industry disruption caused

Runway ML helped turn AI video from “research demos” into something creators can actually use for production workflows. The big shift is that you can generate clips (text-to-video or image-to-video) and then edit them like normal footage—so AI becomes part of the creative pipeline, not a separate experiment.

Runway’s newer model generations have also focused on the hardest pain point in AI video: consistency (same character/object across shots, different lighting, different scenes). That’s a key requirement for storytelling, ads, and brand videos.

Market statistics and growth

AI video is growing fast as a category. Market reports estimate the AI video generator market at hundreds of millions of dollars today and project it to reach multi-billion-dollar levels over the next several years (totals vary by firm, but the direction is consistent).

User behavior and demand signals

Runway’s model upgrades show what users want most:

- Longer clips and the ability to extend scenes (useful for ads and short films)

- Faster generation modes (so iteration becomes practical)

- Better temporal consistency and controllable motion (so outputs feel “directable”)

Geographic presence and creator adoption

Runway is used globally because it’s web-based and fits remote creator workflows. It’s especially popular with:

- Short-form content creators and social teams

- Marketing teams creating many variations

- Filmmakers prototyping scenes and shots

- Agencies producing ads at scale

Future projections

The trend is moving toward “AI filmmaking systems” where you can:

- Keep characters consistent across scenes

- Control motion and camera more precisely

- Generate longer sequences with fewer artifacts

- Blend AI-generated clips with real footage in one workflow

Runway’s Gen-4.5 positioning explicitly highlights improved temporal consistency and controllability, which is exactly where the market is heading.

Opportunities for entrepreneurs

This momentum is why many entrepreneurs want to build similar platforms, especially for niche markets that general-purpose tools are not optimized for, such as:

- E-commerce product videos (fast variants, background changes)

- Real estate walkthrough clips and listing ads

- Local business promo videos and social reels

- Education and training content generation

- Studio-focused pipelines for animation and previsualization

Building Your Own Runway-ML-Like Platform

Why businesses want Runway-style AI video tools

Runway ML proves that video generation becomes valuable when it’s usable end-to-end. Businesses want similar platforms because:

- Video demand is exploding across ads, social, and product content

- Traditional production is slow and expensive

- AI can generate many variations quickly

- Teams need generation and editing in one place

- Subscription + credits create predictable revenue

The real value isn’t just text-to-video—it’s idea → footage → finished video without friction.

Key considerations before development

If you’re planning to build a Runway-like platform, focus on:

- Your core use case (ads, social, film, e-commerce, education)

- Clip length, resolution, and consistency requirements

- Text-to-video vs image-to-video priorities

- Timeline editing and asset management

- Credit or usage-based pricing

- Collaboration for teams and agencies

- Content moderation and export controls

Trying to copy everything at once usually fails. Strong platforms start with one clear video workflow.


Cost Factors & Pricing Breakdown

Runway ML–Like App Development — Market Price

| Development Level | Inclusions | Estimated Market Price (USD) |
|---|---|---|
| 1. Basic AI Video Generation MVP | Core web interface for text-to-video or image-to-video prompts, user registration & login, integration with a single video-generation model/API, prompt → video generation flow, basic video preview & downloads, simple queue handling, minimal safety filters, standard admin panel, basic usage analytics | $120,000 |
| 2. Mid-Level AI Video Creation Platform | Advanced prompt controls (duration, styles, camera motion, aspect ratios), video editing workflows (trim, regenerate, scene-level edits), user workspaces, projects & asset management, queue and priority handling, credits/usage tracking, stronger safety & moderation hooks, analytics dashboard, polished web UI and mobile-ready experience | $220,000 |
| 3. Advanced Runway ML-Level Generative Video Ecosystem | Large-scale multi-tenant video generation platform with high-concurrency pipelines, advanced video editing and compositing tools, prompt and version control, model routing, credits/subscription billing, enterprise organizations & RBAC, detailed observability, robust moderation & policy enforcement, cloud-native scalable architecture | $350,000+ |

Runway ML-Style AI Video Generation Platform Development

The prices above reflect the global market cost of developing a Runway ML–like AI video generation and creative platform — typically ranging from $120,000 to over $350,000, with a delivery timeline of around 6–12 months for a full, from-scratch build. This usually includes video generation pipelines, compute-heavy model orchestration, asset storage and delivery, editing workflows, safety and content policy enforcement, usage metering, analytics, and production-grade infrastructure capable of handling high GPU demand.

Miracuves Pricing for a Runway ML–Like Custom Platform

Miracuves Price: Starts at $15,999

This is positioned for a feature-rich, JS-based Runway ML-style AI video generation platform that can include:

- Text-to-video and image-to-video generation via your chosen AI models or APIs

- Prompt controls (styles, duration, motion) and basic video editing workflows

- User accounts, projects, video history, and asset management

- Usage and credit tracking with optional subscription or pay-per-use billing

- Core moderation and safety hooks aligned with AI video content policies

- A modern, responsive web interface plus optional companion mobile apps

From this foundation, the platform can be extended into advanced video editing, enterprise workspaces, collaborative creative pipelines, custom model hosting, and large-scale SaaS deployments as your AI video product matures.

Note: This includes full non-encrypted source code (complete ownership), complete deployment support, backend & API setup, admin panel configuration, and assistance with publishing on the Google Play Store and Apple App Store—ensuring you receive a fully operational AI video generation ecosystem ready for launch and future expansion.

Delivery Timeline for a Runway ML–Like Platform with Miracuves

For a Runway ML-style, JS-based custom build, the typical delivery timeline with Miracuves is 30–90 days, depending on:

- Depth of video generation and editing features (scenes, motion, regeneration, etc.)

- Number and complexity of AI model, GPU infrastructure, storage/CDN, and billing integrations

- Complexity of usage limits, analytics, and governance requirements

- Scope of web portal, mobile apps, branding, and long-term scalability targets

Tech Stack

Our preferred stack is JavaScript-based: Node.js / Nest.js / Next.js for the web backend and frontend, with Flutter or React Native for mobile apps. This choice favors development speed, scalability, and the benefit of one codebase serving multiple platforms.

Other technology stacks can be discussed and arranged upon request when you contact our team, ensuring they align with your internal preferences, compliance needs, and infrastructure choices.

Essential features to include

A solid Runway-style MVP should include:

- Text-to-video or image-to-video generation

- Multiple variations per prompt

- Clip extension or continuation

- Built-in timeline editing

- Background removal or object isolation

- Export-ready video formats

- Credit-based usage system
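For the credit-based usage system in particular, the core logic can be quite small. The following is a minimal in-memory sketch, assuming a hypothetical `CreditLedger` class; a real platform would persist balances in a database and make charges atomic.

```python
from typing import Dict


class InsufficientCredits(Exception):
    """Raised when an account cannot cover a generation request."""


class CreditLedger:
    """Track per-user credit balances in memory (illustrative only)."""

    def __init__(self) -> None:
        self._balances: Dict[str, int] = {}

    def balance(self, user_id: str) -> int:
        return self._balances.get(user_id, 0)

    def grant(self, user_id: str, credits: int) -> None:
        """Add credits, for example when a subscription renews."""
        if credits <= 0:
            raise ValueError("credits must be positive")
        self._balances[user_id] = self.balance(user_id) + credits

    def charge(self, user_id: str, credits: int) -> int:
        """Deduct credits before a generation job starts; return the new balance."""
        if credits <= 0:
            raise ValueError("credits must be positive")
        if self.balance(user_id) < credits:
            raise InsufficientCredits(f"{user_id} needs {credits} credits")
        self._balances[user_id] = self.balance(user_id) - credits
        return self._balances[user_id]
```

Charging before the job runs, and refusing the request when the balance is too low, keeps compute spend from outrunning what the user has paid for.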

High-impact upgrades later:

- Character and style consistency tools

- Brand presets and templates

- Team collaboration and approvals

- API access for automation

- Longer clip generation and scene chaining


Conclusion

Runway ML shows that AI video isn’t about replacing filmmakers or editors—it’s about removing friction between imagination and execution. By combining generation and editing in one place, it lets creators explore ideas faster, test more variations, and finish projects without traditional production bottlenecks.

For founders and product teams, Runway ML delivers a clear lesson: the future of creative AI lies in workflow-complete platforms. When AI helps users go from concept to deliverable without context switching, adoption grows naturally and long-term value follows.

FAQs

What is Runway ML used for?

Runway ML is used for AI-powered video generation and editing, including text-to-video creation, image-to-video animation, background removal, clip extension, and rapid video prototyping.

How does Runway ML make money?

Runway ML makes money through subscription plans and usage-based credits that cover AI video generation, advanced models, and higher processing limits.

Is Runway ML suitable for beginners?

Yes. Runway ML is designed to be creator-friendly, so beginners can generate videos with simple prompts, while professionals can use it for advanced workflows.

What makes Runway ML different from other AI video tools?

Runway ML combines AI video generation and timeline-based editing in one platform, allowing creators to finish real projects instead of stopping at raw AI clips.

Can Runway ML be used for commercial projects?

Yes. Many creators, agencies, and businesses use Runway ML for ads, social media content, brand videos, and experimental films. Commercial usage rights depend on the terms of your plan.

Does Runway ML support text-to-video and image-to-video?

Yes. Runway ML supports text-to-video, image-to-video, and video-to-video transformations, giving creators multiple ways to generate footage.

How long are Runway ML video clips?

Clip length depends on the model and plan, but users can also extend clips to create longer scenes.

Is Runway ML good for marketing teams?

Yes. Marketing teams use Runway ML to quickly generate multiple video variations, test creative ideas, and reduce production turnaround time.

Can I build a platform like Runway ML?

Yes. Runway-style platforms can be built by combining AI video generation models, clip continuity logic, editing workflows, and scalable infrastructure.

How can Miracuves help build a Runway-ML-like platform?

Miracuves helps founders build AI video generation platforms with text-to-video pipelines, editing tools, credit systems, and enterprise-ready architecture—allowing faster launch and scalable growth.