Picture this: You’re sipping coffee while casually chatting with an AI that understands not just your voice, but your sarcasm, gestures, screenshots, and even your dog’s bark in the background. Wild? Not anymore. Welcome to the age of multimodal AI — where communication meets cognition, and machines aren’t just replying, they’re comprehending.

For creators, tech founders, and digital hustlers, this isn’t a “maybe later” thing; it’s a now-or-never game. The GPTs of the world are evolving faster than you can say “pivot,” and models like Google’s Gemini, Anthropic’s Claude, and the open-source LLaVA are setting new expectations for how smart (and useful) AI can be across media formats. If your platform doesn’t “see,” “hear,” or “feel” data the way humans do? You might as well be carrying a pager in the TikTok era.

That’s why we’re diving into how to build a multimodal AI platform: not just the buzz, but the brain, backend, and business logic behind it. And hey, if you’re planning to launch your own AI beast, Miracuves is right behind you with scalable app clones and battle-tested dev power.

What Is a Multimodal AI Platform?

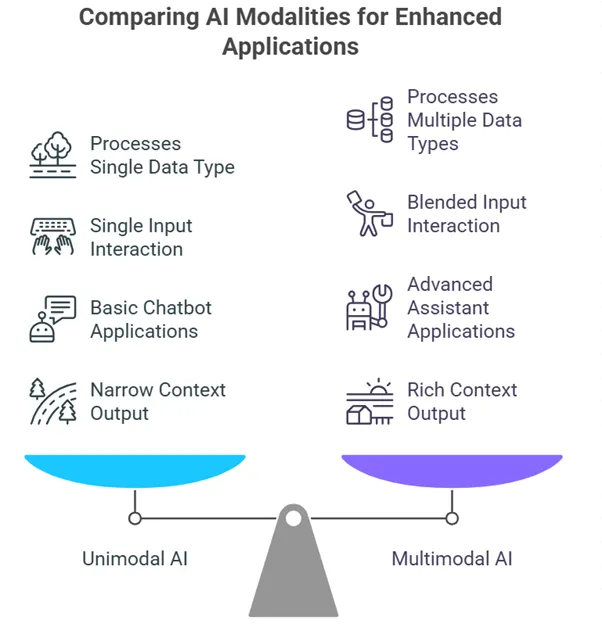

Multimodal AI combines inputs from multiple data types — think text, audio, image, and video — to generate richer, more context-aware outputs.

Instead of just understanding your typed prompt, a multimodal AI can:

- Analyze a selfie and comment on your mood

- Watch a tutorial and summarize it

- Listen to an audio clip and detect tone

- Process documents with embedded graphs and provide analysis

It’s basically the intersection of natural language processing (NLP), computer vision (CV), and sometimes even haptics and sensor data. Production models like OpenAI’s GPT-4o and Google’s Gemini already accept text, images, and audio in a single model, and research models like Meta’s ImageBind learn one joint embedding space across six modalities.

Core Components of a Multimodal AI Platform

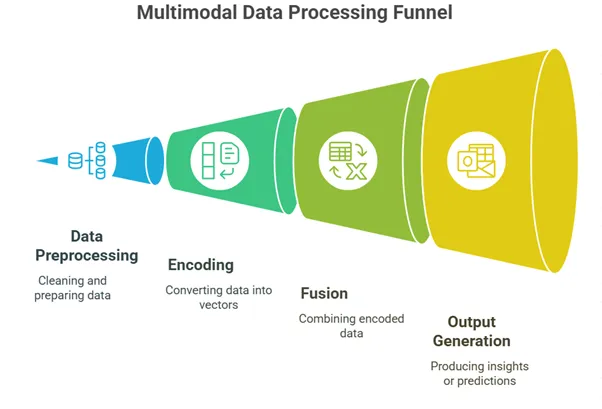

1. Data Ingestion Layer

This is your raw intake pipe. You need to handle and normalize different data streams — from YouTube videos to PDF scans to whispered voice memos.

- Image: Preprocess with OpenCV

- Audio: Transcribe using Whisper (Mozilla’s DeepSpeech also exists but is no longer actively maintained)

- Text: Clean and tokenize

- Video: Segment keyframes, extract metadata
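The first job of the intake pipe is deciding which branch each input goes down. Below is a minimal sketch, assuming inputs arrive as files and modality is guessed from the file extension via Python’s standard `mimetypes` module. The function names and the routing table are hypothetical, and the tiny text and pixel helpers only stand in for the real OpenCV and Whisper steps listed above.

```python
import mimetypes
import re

# Hypothetical mapping from MIME top-level type to the pipeline's modality label.
MODALITY_BY_MIME_PREFIX = {"image": "image", "audio": "audio", "video": "video", "text": "text"}


def detect_modality(path: str) -> str:
    """Guess a file's modality from its extension (not by inspecting its bytes)."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None:
        return "unknown"
    if mime == "application/pdf":
        return "document"  # PDF scans usually need OCR and layout parsing
    return MODALITY_BY_MIME_PREFIX.get(mime.split("/")[0], "unknown")


def clean_text(raw: str) -> list[str]:
    """Lowercase, drop URLs, and split into simple word tokens."""
    without_urls = re.sub(r"https?://\S+", " ", raw.lower())
    return re.findall(r"[a-z0-9']+", without_urls)


def normalize_pixels(pixels: list[int]) -> list[float]:
    """Scale 8-bit grayscale values (0-255) into [0.0, 1.0] as a first normalization step."""
    return [p / 255.0 for p in pixels]
```

Extension-based detection is easy to fool, so for user uploads it is safer to also check the file’s magic bytes (for example with a library such as python-magic).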

2. Feature Extraction Engines

Before merging, each modality is processed through its own encoder:

- Text → Transformers (BERT, RoBERTa)

- Image → CNNs, ViT (Vision Transformers)

- Audio → Spectrogram + RNN/CNN/Transformer

- Video → Clip-by-clip embedding (e.g., with TimeSformer)
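To make the “Spectrogram” step concrete, here is a stdlib-only sketch that slices audio into overlapping, Hann-windowed frames and takes a log-magnitude DFT of each one; the resulting grid is what an audio encoder would consume. The frame length and hop are arbitrary assumptions, and the naive DFT is only for illustration. Real pipelines use an FFT (NumPy, torchaudio, librosa) and usually add a mel filterbank.

```python
import cmath
import math


def frame_signal(samples: list[float], frame_len: int, hop: int) -> list[list[float]]:
    """Split a 1-D signal into overlapping frames, dropping any incomplete tail."""
    return [samples[i:i + frame_len] for i in range(0, len(samples) - frame_len + 1, hop)]


def hann(n: int) -> list[float]:
    """Periodic Hann window; tapers frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def magnitude_spectrum(frame: list[float]) -> list[float]:
    """Naive O(n^2) DFT magnitudes for bins 0..n/2 (use an FFT in practice)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def log_spectrogram(samples: list[float], frame_len: int = 64, hop: int = 32) -> list[list[float]]:
    """One row per frame: the log-magnitude spectrum of the windowed frame."""
    window = hann(frame_len)
    spectrogram = []
    for frame in frame_signal(samples, frame_len, hop):
        windowed = [s * w for s, w in zip(frame, window)]
        spectrogram.append([math.log(m + 1e-9) for m in magnitude_spectrum(windowed)])
    return spectrogram


# A pure tone completing exactly 8 cycles per 64-sample frame should peak in bin 8.
tone = [math.sin(2 * math.pi * 8 * t / 64) for t in range(256)]
spec = log_spectrogram(tone)
print(len(spec), len(spec[0]))  # 7 33  (7 frames x 33 frequency bins)
```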

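Each modality gets vectorized into embeddings. Think of it as translating every media type into a shared language: math. Before fusion, those vectors are typically projected to a common dimension so that, say, an image embedding and a text embedding can be compared directly.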
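The “shared language” is, in practice, a shared embedding space. Each encoder emits vectors of its own width, and a per-modality linear projection maps them to one common size. The sketch below uses made-up encoder widths and random, untrained weights purely to show the shapes; in CLIP-style models these projection matrices are learned jointly with the encoders. `SHARED_DIM`, `ENCODER_DIMS`, and the helper names are hypothetical.

```python
import math
import random

SHARED_DIM = 4  # assumption: a tiny shared embedding size for illustration
ENCODER_DIMS = {"text": 6, "image": 10, "audio": 8}  # hypothetical encoder output widths


def make_projection(in_dim: int, out_dim: int, seed: int) -> list[list[float]]:
    """Random out_dim x in_dim matrix; a real system learns these weights."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0 / math.sqrt(in_dim)) for _ in range(in_dim)] for _ in range(out_dim)]


def project(matrix: list[list[float]], vec: list[float]) -> list[float]:
    """Matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def l2_normalize(vec: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


PROJECTIONS = {m: make_projection(d, SHARED_DIM, seed=i) for i, (m, d) in enumerate(ENCODER_DIMS.items())}


def to_shared_space(modality: str, features: list[float]) -> list[float]:
    """Project one modality's features into the shared, unit-length embedding space."""
    if len(features) != ENCODER_DIMS[modality]:
        raise ValueError(f"{modality} features must have length {ENCODER_DIMS[modality]}")
    return l2_normalize(project(PROJECTIONS[modality], features))


text_vec = to_shared_space("text", [0.1] * 6)
image_vec = to_shared_space("image", [0.2] * 10)
similarity = sum(a * b for a, b in zip(text_vec, image_vec))  # cosine, since both are unit-length
```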

3. Fusion Layer

The heart of your platform. Here’s where it gets spicy.

There are 3 common fusion strategies:

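- Early Fusion: Merge low-level features (e.g., concatenate them) before one joint model processes them. It captures fine-grained cross-modal interactions but is sensitive to misaligned or missing inputs (high risk, high reward)

- Late Fusion: Run each modality through its own model, then combine the predictions. Robust and modular, but it misses interactions between modalities (safe but shallow)

- Hybrid Fusion: Fuse some modalities early and merge the rest at the decision level to get the best of both worlds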
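Early and late fusion are easiest to compare side by side. In this sketch (toy numbers, hypothetical function names), early fusion concatenates feature vectors before any model sees them, while late fusion lets each modality’s model output class probabilities and then takes a weighted average. The weights are an assumption you would tune on validation data; a hybrid system would combine both, for example early-fusing image and text and late-fusing audio.

```python
def early_fusion(feature_sets: list[list[float]]) -> list[float]:
    """Concatenate per-modality feature vectors so one joint model sees them all at once."""
    fused: list[float] = []
    for features in feature_sets:
        fused.extend(features)
    return fused


def late_fusion(prob_sets: list[list[float]], weights: list[float]) -> list[float]:
    """Weighted average of per-modality class probabilities from independent models."""
    total = sum(weights)
    n_classes = len(prob_sets[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, prob_sets)) / total
        for c in range(n_classes)
    ]


# Toy example: a text model and an image model disagree about [positive, negative].
text_probs = [0.7, 0.3]
image_probs = [0.2, 0.8]
joint_input = early_fusion([[0.1, 0.4], [0.9, 0.2, 0.5]])  # 5-dim input for a joint model
decision = late_fusion([text_probs, image_probs], weights=[1.0, 3.0])  # ≈ [0.325, 0.675]
```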

Use attention-based mechanisms (like cross-modal transformers) to allow features to interact dynamically.
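Cross-modal attention is ordinary scaled dot-product attention where the queries come from one modality (say, text tokens) and the keys and values from another (say, image patches). Here is a dependency-free sketch with toy 2-D vectors; real models add learned query/key/value projections, multiple heads, and batched tensor ops (e.g. PyTorch’s `torch.nn.MultiheadAttention`).

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Each query (e.g. a text token) attends over keys/values from another modality
    (e.g. image patches). Returns (outputs, attention weights per query)."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy: one text-token query and two image-patch keys; patch 0 matches the query.
outputs, weights = cross_attention(
    queries=[[4.0, 0.0]],
    keys=[[4.0, 0.0], [0.0, 4.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
# weights[0] is close to [1.0, 0.0]: the token "looks at" the matching patch.
```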

4. Model Architecture

Use foundational models with fine-tuning:

- Encoders: For each modality (text/image/audio)

- Fusion Core: Usually a transformer variant

- Decoder/Classifier: Tailored to your platform’s task (chatbot, summarizer, recommender)

Frameworks like HuggingFace Transformers and TorchMultimodal are your best friends for modeling, and OpenVINO helps once you need to optimize inference.
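One way to keep the encoder / fusion core / decoder split explicit is to treat each piece as a swappable component. The sketch below is framework-agnostic and wires toy stand-ins together (all component names are hypothetical); in practice the encoders would be pretrained models loaded through HuggingFace Transformers or TorchMultimodal, and the fusion core a transformer.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Vector = List[float]


@dataclass
class MultimodalModel:
    """Encoders -> fusion core -> task head, each a swappable callable."""

    encoders: Dict[str, Callable[[Any], Vector]]  # one encoder per modality
    fusion: Callable[[List[Vector]], Vector]      # a transformer in a real system
    head: Callable[[Vector], str]                 # decoder/classifier for your task

    def __call__(self, inputs: Dict[str, Any]) -> str:
        missing = set(inputs) - set(self.encoders)
        if missing:
            raise KeyError(f"no encoder registered for: {sorted(missing)}")
        # Encode in a fixed (sorted) modality order so fusion sees a stable layout.
        embeddings = [self.encoders[name](inputs[name]) for name in sorted(inputs)]
        return self.head(self.fusion(embeddings))


# Hypothetical toy components standing in for pretrained models.
def text_encoder(text: str) -> Vector:
    return [float(len(text.split())), 0.0]


def image_encoder(pixels: List[int]) -> Vector:
    return [0.0, sum(pixels) / len(pixels)]


def mean_fusion(vectors: List[Vector]) -> Vector:
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def dominance_head(fused: Vector) -> str:
    return "image-heavy" if fused[1] > fused[0] else "text-heavy"


model = MultimodalModel(
    encoders={"text": text_encoder, "image": image_encoder},
    fusion=mean_fusion,
    head=dominance_head,
)
```

Swapping in a new modality then means registering one more encoder rather than rewriting the pipeline.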

5. Training Stack

You’ll need GPU (or TPU) horsepower, lots of data (like LAION-5B, AudioSet), and robust evaluation benchmarks (VQA, MME, etc.).

Use:

- Self-supervised learning

- Contrastive loss functions

- Few-shot or zero-shot transfer to new tasks
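“Contrastive loss” in multimodal training usually means a CLIP-style symmetric InfoNCE objective: matching image-text pairs in a batch are pulled together, and every mismatched pairing in the batch acts as a negative. This pure-Python sketch computes only the loss (no gradients or training loop); the temperature of 0.07 is a common starting value, treated here as an assumption.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def log_softmax_at(logits: list[float], idx: int) -> float:
    """log(softmax(logits)[idx]), computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return logits[idx] - log_sum_exp


def clip_contrastive_loss(image_embs, text_embs, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: (image i, text i) is the positive pair for row/column i."""
    n = len(image_embs)
    logits = [[cosine(image_embs[i], text_embs[j]) / temperature for j in range(n)] for i in range(n)]
    image_to_text = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    text_to_image = -sum(log_softmax_at([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
aligned = clip_contrastive_loss(images, [[0.9, 0.1], [0.1, 0.9]])   # captions match images
shuffled = clip_contrastive_loss(images, [[0.1, 0.9], [0.9, 0.1]])  # captions swapped
# aligned is near zero; shuffled is large, so training pushes toward correct pairings.
```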

Use Cases of Multimodal AI (with Real Examples)

Content Creation

- Tool: ChatGPT (GPT-4o)

- Function: Draft blog posts from voice memos + screenshots

- Why It Works: Understands context beyond words

E-commerce

- Tool: Amazon Lens (camera-based visual search)

- Function: Snap → Shop

- Why It Works: Combines image + user query for precise results
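Why does combining the photo with a typed query help? This toy sketch (illustrative only, not a description of any retailer’s actual system) blends an image embedding and a text embedding into one query vector and ranks products by cosine similarity. The 3-D embeddings and the `image_weight` of 0.4 are invented for the example; real embeddings come from a trained model such as a CLIP-style encoder.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def blend_query(image_vec: list[float], text_vec: list[float], image_weight: float = 0.4) -> list[float]:
    """Weighted sum of image and text query embeddings (image_weight is a tunable assumption)."""
    return [image_weight * i + (1 - image_weight) * t for i, t in zip(image_vec, text_vec)]


def rank_products(query_vec: list[float], catalog: dict[str, list[float]]) -> list[str]:
    """Product names sorted by cosine similarity to the query, best first."""
    return sorted(catalog, key=lambda name: cosine(query_vec, catalog[name]), reverse=True)


# Toy 3-D embeddings: [is_sneaker, is_red, is_blue].
CATALOG = {
    "red sneaker": [1.0, 1.0, 0.0],
    "blue sneaker": [1.0, 0.0, 1.0],
    "red dress": [0.0, 1.0, 0.0],
}
photo = [1.0, 0.0, 1.0]  # user snaps a blue sneaker
query = [0.0, 1.0, 0.0]  # ...and types "in red"
```

Searching with the photo alone returns the blue sneaker and the text alone returns the red dress; only the blended query surfaces the red sneaker the user actually wants.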

EdTech

- Tool: Khanmigo (powered by GPT)

- Function: Analyze math problems from photos + tutor via voice

- Why It Works: Offers interaction across formats

Healthcare

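- Tool: Google’s Med-PaLM M (a research model)

- Function: Medical report parsing + X-ray analysis

- Why It Works: Interprets images and clinical text together, which could help reduce diagnostic errors; it is not yet a deployed clinical product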

Business Model Options for Multimodal AI Platforms

1. Freemium → Pro SaaS

Let users play with limited features, charge for:

- Additional modalities

- API access

- Custom model tuning

2. B2B Licensing

Offer AI modules as white-label solutions.

3. Enterprise Custom Solutions

Charge for verticalized AI (e.g., retail, finance, medtech) with compliance layers.

4. Ads & Affiliate Integrations

Monetize through smart ad placements based on context-aware interaction.

Development Stack: Tools & Frameworks You’ll Need

- HuggingFace Transformers (pretrained multimodal models)

- OpenAI APIs / Gemini Pro

- PyTorch/TensorFlow

- TorchMultimodal

- FastAPI or Node.js for backend

- Firebase, Supabase, MongoDB for storage

- Docker + Kubernetes for scalability

Challenges You’ll Face (and How to Outsmart Them)

Data Alignment

Issue: Matching audio to visual to text can be messy.

Fix: Use timestamps and contextual cues (e.g., scene transitions).
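Here is a minimal sketch of both cues, assuming decoded grayscale frames at a known frame rate and a transcript with per-segment start/end timestamps (the kind Whisper produces). Scene cuts come from a simple mean-frame-difference threshold, and each transcript segment is assigned to the scene containing its midpoint. The threshold, the midpoint rule, and the function names are assumptions; dedicated tools such as PySceneDetect use more robust detectors.

```python
from bisect import bisect_right


def detect_scene_cuts(frames: list[list[float]], threshold: float) -> list[int]:
    """Frame indices that start a new scene (mean absolute pixel change > threshold)."""
    cuts = [0]
    for i in range(1, len(frames)):
        diff = sum(abs(a - b) for a, b in zip(frames[i], frames[i - 1])) / len(frames[i])
        if diff > threshold:
            cuts.append(i)
    return cuts


def assign_segments_to_scenes(segments, scene_starts):
    """Group (start_sec, end_sec, text) segments by the scene containing their midpoint.

    scene_starts: ascending scene start times in seconds, beginning at 0.0.
    """
    scenes: dict[int, list[str]] = {}
    for start, end, text in segments:
        midpoint = (start + end) / 2
        scene = bisect_right(scene_starts, midpoint) - 1
        scenes.setdefault(scene, []).append(text)
    return scenes


# Toy input: four 2-pixel grayscale frames sampled at 1 frame per second.
FPS = 1.0
frames = [[10, 10], [12, 10], [200, 210], [198, 205]]
scene_starts = [i / FPS for i in detect_scene_cuts(frames, threshold=50)]  # [0.0, 2.0]
transcript = [(0.0, 1.5, "intro"), (1.5, 2.9, "transition"), (2.2, 3.0, "demo")]
print(assign_segments_to_scenes(transcript, scene_starts))  # {0: ['intro'], 1: ['transition', 'demo']}
```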

Model Overhead

Issue: Multimodal models are resource-hungry.

Fix: Quantization, distillation, edge inference.
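Quantization is the easiest of the three to show in a few lines. This sketch implements symmetric per-tensor int8 quantization: each float weight is stored as an 8-bit integer plus one shared scale, which cuts storage roughly 4x versus float32 at the cost of a rounding error of at most half the scale per weight. Production toolchains (PyTorch quantization, ONNX Runtime, OpenVINO) add per-channel scales and calibration on top; the function names here are illustrative.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() is round-half-to-even, which still stays within half a step of w / scale.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)  # scale = 1.27 / 127 ≈ 0.01
restored = dequantize(q, scale)    # each value within scale / 2 of the original
```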

Bias & Misinterpretation

Issue: Multimodal AI can amplify stereotypes from multiple channels.

Fix: Use diverse datasets, transparent feedback loops.
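A transparent feedback loop starts with measurement. One common first check, sketched here with hypothetical group labels and an assumed tolerance, is to break evaluation errors down by user or content segment and flag any segment that lags the best-performing one. It is a screening step, not a complete fairness audit.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, prediction, label) tuples -> {group: error rate}."""
    totals: dict = defaultdict(int)
    errors: dict = defaultdict(int)
    for group, prediction, label in records:
        totals[group] += 1
        errors[group] += int(prediction != label)
    return {group: errors[group] / totals[group] for group in totals}


def flag_disparities(rates: dict, max_gap: float) -> list:
    """Groups whose error rate exceeds the best-performing group's by more than max_gap."""
    best = min(rates.values())
    return sorted(group for group, rate in rates.items() if rate - best > max_gap)


# Hypothetical evaluation log: (user segment, model label, human label).
records = [
    ("A", "cat", "cat"), ("A", "dog", "dog"), ("A", "cat", "dog"), ("A", "dog", "dog"),
    ("B", "cat", "dog"), ("B", "dog", "cat"), ("B", "dog", "dog"), ("B", "cat", "cat"),
]
rates = error_rate_by_group(records)            # {'A': 0.25, 'B': 0.5}
flagged = flag_disparities(rates, max_gap=0.1)  # ['B']
```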

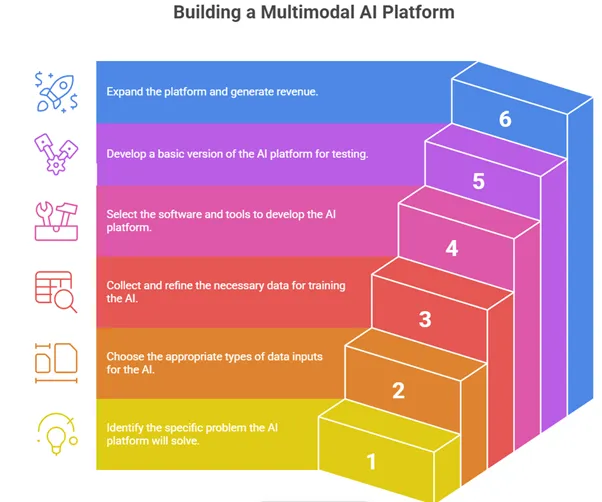

Roadmap for Building a Multimodal AI Platform

- Define Use Case: Productivity tool? Creator aid? Commerce assistant?

- Pick Modalities: Text + Image? Or full stack (Text + Image + Audio + Video)?

- Collect & Clean Data: Avoid garbage-in-garbage-out.

- Choose Frameworks: HuggingFace, TorchMultimodal, OpenAI, etc.

- Build MVP: Keep it lean, validate with real users.

- Scale and Optimize: Add features, optimize inference time, monetize.

Conclusion

Multimodal AI isn’t just a trend; it’s the next UX evolution. From visual search to interactive learning, it reshapes how humans and machines collaborate. Yes, it’s complex. Yes, the tech is intense. But the potential? Huge. The best time to start? Yesterday. The second-best? Today.

At Miracuves, we help innovators launch high-performance app clones that are fast, scalable, and monetization-ready. Ready to turn your idea into reality? Let’s build together.

FAQs

1. What makes an AI platform multimodal?

It can process and generate responses across multiple formats like text, audio, image, and video — not just one.

2. Can I build one without AI expertise?

You’ll need some help. Frameworks like HuggingFace lower the barrier, and dev partners like Miracuves make it plug-and-play.

3. Is multimodal AI expensive to run?

It can be, since large models need GPUs to serve. But quantization, serverless APIs, and edge computing are steadily bringing costs down.

4. Are there open-source multimodal models?

Yes — check out OpenFlamingo, LLaVA, and Meta’s ImageBind.

5. How is this better than a text-only chatbot?

A text-only model can only read and write words. Multimodal AI also “sees” and “hears,” offering richer interaction. ChatGPT itself now handles images and voice, for example via GPT-4o.
